Enhance release control with AWS CodePipeline stage-level conditions

October 17, 2024It’s an established practice for development teams to build deployment pipelines, with services such as AWS CodePipeline, to increase the quality of application and infrastructure releases through reliable, repeatable and consistent automation.

Automating the deployment process helps build quality into our products by introducing continuous integration to build and test code, however enterprises may sometimes wish to implement certain conditions such as an approved deployment window to ensure more fine-grained control over pipeline executions.

AWS CodePipeline recently added stage-level conditions to implement pipeline gates. In this blog post, we will cover how you can implement stage-level conditions to improve your governance, code quality, and security by following a simple pipeline scenario detailed in the sections below.

AWS CodePipeline

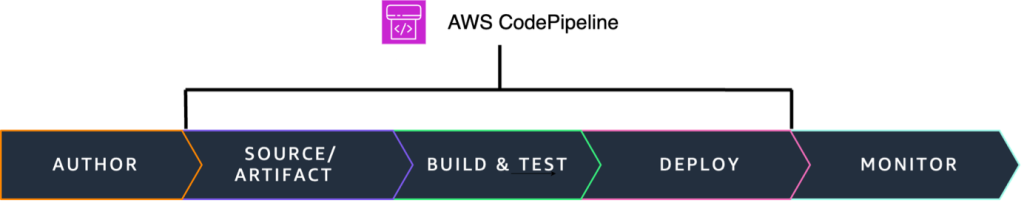

AWS CodePipeline is a fully managed continuous delivery service that helps you automate your release pipelines for fast and reliable application and infrastructure updates.

Figure 1. AWS CodePipeline orchestration

CodePipeline has three core constructs:

- Pipelines – A pipeline is a workflow construct that describes how software changes go through a release process. Each pipeline is made up of a series of stages.

- Stages – A stage is a logical unit you can use to isolate an environment and to limit the number of concurrent changes in that environment. Each stage contains actions that are performed on the application artifacts.

- Actions – An action is a set of operations performed on application code and configured so that the actions run in the pipeline at a specified point.

A pipeline execution is a set of changes released by a pipeline. Each pipeline execution is unique and has its own ID. An execution corresponds to a set of changes, such as a merged commit or a manual release of the latest commit.

You can now configure stage-level conditions to gate a pipeline execution before entering the stage, as well as when exiting a stage for both success and failure state. A condition consists of one or more rules, and a result (such as rolling back) to apply when the condition fails. Conditions are also referred to as gates because they allow you to specify when an execution will enter and run through a stage or exit the stage after running through it.

You can configure a stage-level condition from the console, API, CLI, AWS CloudFormation, or SDK. For the purposes of this blog post, we will showcase how you can configure the two gate scenarios using the console. You can see how you can create conditions using the CLI in the documentation.

Scenario

For this blog, we started with a four stage Pipeline based off the Amazon ECS deployment example from this tutorial. While this tutorial provides a getting started example on how to build a CI/CD pipeline for an ECS application, it lacks the quality gates we want to enforce to ensure what we deploy to production is safe and tested. For this we will turn to CodePipeline stage-level conditions to verify certain criteria is met throughout the pipeline execution.

In our environment, we started with a source repository in a Github organization and used AWS CodeConnections to connect the source stage to our pipeline. Inside our repository are files for a sample application, a Dockerfile to build our application, and a buildspec.yaml file to define the steps that will be executed in the build stage of our pipeline. We added a fourth stage to our pipeline which separated production deployments after a successful deployment to the staging environment. We released a change using this pipeline to ensure everything deployed as expected.

Figure 2: Example of a 4-Stage CodePipeline

Applying Stage Conditions

One of the primary concerns in any development process is maintaining high code quality standards. With stage-level conditions, you can now include specific checks to verify that your organization’s security and governance standards are met throughout the entire execution of your pipelines. These checks can include restricting deployments to certain days of the week, monitoring Amazon CloudWatch alarms prior to progressing, and verifying the results of security testing before promoting code as examples.

The AWS CodePipeline documentation details what conditions are available and how they can be used.

You can add conditions to stages on the pipelines detail page by choosing Edit and then selecting Edit stage from the relevant stage.

Figure 3: Adding a Stage Condition in the AWS console

In our pipeline, we added rules that address three scenarios:

- Scenario 1 – “On Success” condition that fails if the Amazon Elastic Container Registry (Amazon ECR) image scanning detects a critical severity in the Docker image in the build stage.

- Scenario 2 – “On Failure” condition that rolls back a deployment stage in the event a CloudWatch alarm triggers after a successful deployment.

- Scenario 3 – “Entry” condition to prevent deploying to production on Fridays, Saturdays and Sundays

Scenario 1 – “On Success” condition

For the first gate we implemented an OnSuccess exit condition that would fail the execution using the AWS Lambda Invoke rule provider. If you would like to dive deeper into this implementation, you can take a look at Logging image scan findings from Amazon ECR in CloudWatch using an AWS Lambda function.

Figure 4: Lambda invoke rule in the console

Before we added this condition rule, we created a Lambda function in the same region that was responsible for getting the findings from an image scan that Amazon ECR executed. If a critical finding was detected in the image, the Lambda function will fail the condition. You can read more about utilizing Lambda with CodePipeline in the documentation. The LambdaInvoke rule is able to use input artifacts to implement additional logic in the function. Here we passed in the artifact created during the build stage which contains the ECR image name and tag. This pattern allows you to re-use Lambda functions as conditions across many pipelines.

After configuring the rule, we reviewed our stage configuration and saved the pipeline.

Figure 5: Adding a stage condition to the build stage

Scenario 2 – “On Failure” condition

Next, we configured a condition to fail and rollback a deployment in our staging environment. If you would like to read more about implementing rollback in your CodePipeline pipelines, you can refer to De-risk releases with AWS CodePipeline rollbacks. Oftentimes a deployment will appear successful, but regressions in the code, or bugs in a new feature, may surface only when users interact with the application. To mitigate this risk, we configured a CloudWatch alarm to alert when error rates exceeded 3% of overall traffic.

Figure 6: Amazon CloudWatch alarm definition

We then added an OnSuccess condition to the DeployToStage stage with a rollback rule that tracks the CloudWatch alarm configured for the above error rate metric. A wait time was configured in the rule to allow time for CloudWatch to detect any increase in errors related to the new deployment.

Figure 7: AWS CodePipeline condition rule using Amazon CloudWatch

Scenario 3 – “Entry” condition

Finally, we added a third condition as an entry condition to the DeployToProduction stage. We added an AWS DeploymentWindow rule provider with a cron expression to fail the condition if a deployment to production was executed outside of our defined window.

Figure 8: Cron rule for AWS CodePipeline stage conditions

Testing Stage Conditions

Testing Scenario 1

To test our CodePipeline with these stage-level conditions, we executed a git push to the source repository, which triggered a new pipeline execution. Our build stage successfully built a Docker image and pushed that image to the ECR repository, triggering the OnSuccess condition to check for critical vulnerabilities. The Lambda function reported that the scan exceeded our vulnerability threshold and stopped our pipeline with a reason of “Critical Findings Detected”

Figure 9: Amazon ECR scan condition failure

Navigating to the Amazon Elastic Container Registry service console, we were able to identify the critical vulnerability.

Figure 10: Amazon ECR vulnerability report

By reading the CVE we learned that the finding was related to a system package present in the source image defined in our Dockerfile. To remediate this issue, we added a new line to the Dockerfile in our source repository. The full Dockerfile is shown below for example with the change highlighted.

FROM public.ecr.aws/docker/library/python:3.12 WORKDIR /qchess

COPY . /qchess/

RUN apt-get remove --purge git git-man -y

RUN cd /qchess && pip install -r requirements.txt

RUN apt update && apt upgrade -y CMD ["flask","run","--host=0.0.0.0","--port=80"]With this change, we ensured that our image’s system packages would be upgraded on every build along with the version of the vulnerable package discovered by our condition check. We committed and pushed to our source repository to trigger a new pipeline execution.

Testing Scenario 2

During the next run, our Pipeline made it to the DeployToStage stage and successfully deployed our code changes to our staging environment. However, when the OnSuccess condition was executed, it detected that the error rate alarm had transitioned to alarm status, resulting in the stage condition to issue a rollback. We had a bug in our new feature!

Figure 11: CloudWatch Rule triggering a stage rollback

We reviewed the CloudWatch alarm and noticed the elevated error rates related to the deployment. The error rates returned to normal after the stage completed its rollback.

Figure 12: Amazon CloudWatch alarm details

After this rollback we reviewed our code changes and discovered a bug in the code. We made the appropriate changes and pushed to our main branch.

Testing Scenario 3

In the final pipeline execution, the pipeline made it past our DeployToStage condition and entered the entry condition in our DeployToProduction stage. This condition verified that the deployment was being executed within the defined deployment window and successfully deployed our change to production.

Figure 13: Deployment window allowed by stage condition rule

Clean up

If you followed along you should remove any resources you created related to this scenario. These resources include:

- ECS Services

- ECR Repository

- CodeBuild Project

- CodePipeline Pipeline

- Lambda Function

- CloudWatch Alarm

- Associated IAM roles

Conclusion

Adding conditions to your AWS CodePipeline pipelines allows you to have fine-grained control over your pipeline. We have seen how you can safely automate deployments based on variables relevant to your organization. To learn more about CodePipeline conditions, visit How do stage conditions work.

Further reading

- Ensuring rollback safety during deployments

- Implement automatic rollbacks for failed deployments

- My CI/CD pipeline is my release captain: Easy and automatic rollbacks

- Automating safe, hands-off deployments

About the Authors: