Best practices working with self-hosted GitHub Action runners at scale on AWS

June 26, 2024

Overview

GitHub Actions is a continuous integration and continuous deployment (CI/CD) platform that automates the build, test, and deployment activities for your workload. GitHub self-hosted runners provide a flexible and customizable option to run your GitHub Actions workflows. These runners let you run your builds on your own infrastructure, giving you control over the environment in which your code is built, tested, and deployed. This can reduce your security risk and costs, and lets you use specific tools and technologies that may not be available on GitHub-hosted runners. In this blog, I explore security, performance, and cost best practices to consider when deploying GitHub self-hosted runners to your AWS environment.

Best Practices

Understand your security responsibilities

GitHub self-hosted runners, by design, execute code defined within a GitHub repository, either through the workflow scripts or through the repository build process. You must understand that the security of your AWS runner execution environments is dependent upon the security of your GitHub implementation. Whilst a complete overview of GitHub security is outside the scope of this blog, I recommend that before you begin integrating your GitHub environment with your AWS environment, you review and understand at least the following GitHub security configurations:

- Federate your GitHub users, and manage the lifecycle of identities through a directory.

- Limit administrative privileges of GitHub repositories, and restrict who is able to administer permissions, write to repositories, modify repository configurations or install GitHub Apps.

- Limit control over GitHub Actions runner registration and group settings.

- Limit control over GitHub workflows, and follow GitHub’s recommendations on using third-party actions.

- Do not allow public repositories access to self-hosted runners.

Reduce security risk with short-lived AWS credentials

Make use of short-lived credentials wherever you can. By default they expire after 1 hour, and you do not need to rotate or explicitly revoke them. Short-lived credentials are created by the AWS Security Token Service (STS). If you use federation to access your AWS account, assume roles, or use Amazon EC2 instance profiles and Amazon ECS task roles, you are using STS already!

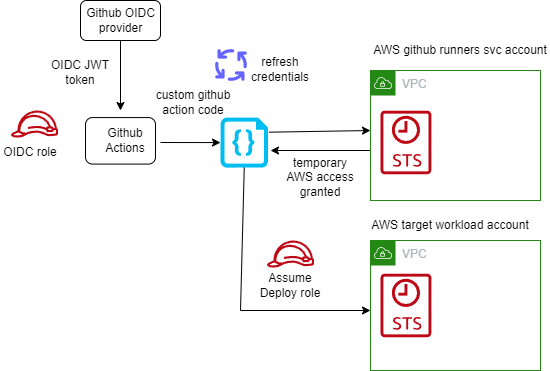

In almost all cases, you do not need long-lived AWS Identity and Access Management (IAM) credentials (access keys), even for services that do not “run” on AWS: you can extend IAM roles to workloads outside of AWS without having to manage long-term credentials. With GitHub Actions, we suggest you use OpenID Connect (OIDC). OIDC is a decentralized authentication protocol supported natively by GitHub, by many other identity providers, and by STS through sts:AssumeRoleWithWebIdentity. With OIDC, you can create least-privilege IAM roles tied to individual GitHub repositories and their respective workflows. GitHub Actions exposes an OIDC token provider to each workflow run, which you can use for this purpose.

Short-lived AWS credentials with GitHub self-hosted runners
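As a sketch of the OIDC setup described above, the following JavaScript builds the trust policy for a role that workflows from a single repository and branch can assume. It assumes an IAM OIDC identity provider for token.actions.githubusercontent.com already exists in the account; the account ID, repository, and the helper name buildGitHubOidcTrustPolicy are placeholders. The workflow itself also needs the id-token: write permission to request the OIDC token.

```javascript
// Hypothetical helper: builds an IAM role trust policy that lets GitHub Actions
// workflows from one repository and branch assume the role through OIDC.
function buildGitHubOidcTrustPolicy(accountId, repository, branch) {
  // Issuer host of GitHub's OIDC tokens; an IAM OIDC identity provider for it
  // must already exist in the account.
  const provider = "token.actions.githubusercontent.com";
  return {
    Version: "2012-10-17",
    Statement: [
      {
        Effect: "Allow",
        Principal: { Federated: `arn:aws:iam::${accountId}:oidc-provider/${provider}` },
        Action: "sts:AssumeRoleWithWebIdentity",
        Condition: {
          StringEquals: {
            // Tokens must be issued for STS.
            [`${provider}:aud`]: "sts.amazonaws.com",
            // Only this repository and branch; use StringLike to allow wildcards.
            [`${provider}:sub`]: `repo:${repository}:ref:refs/heads/${branch}`,
          },
        },
      },
    ],
  };
}

const examplePolicy = buildGitHubOidcTrustPolicy(
  "111122223333", // placeholder account ID
  "testorg-poc/github-actions-test-repo",
  "main"
);
console.log(JSON.stringify(examplePolicy, null, 2));
```

Jobs that reference a GitHub environment present a different sub value (repo:ORG/REPO:environment:NAME), so the condition has to match whichever form your workflows actually use.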

If you have many repositories that you each wish to grant an individual role to, you may run into the limit on the number of IAM roles in a single account. While I advocate solving this problem with a multi-account strategy, you can alternatively scale this approach by:

- Using attribute-based access control (ABAC) to match claims in the GitHub token (such as repository name, branch, or team) to AWS resource tags.

- Using role-based access control (RBAC) by logically grouping repositories in GitHub into teams or applications, so that you need a smaller set of roles.

- Using an identity broker pattern to vend credentials dynamically based on the identity provided to the GitHub workflow.
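To illustrate the RBAC option, the following sketch gives each team one role whose trust policy lists all of the team's repositories in the sub condition. The teamRepositories table and the helper names are hypothetical. IAM treats a list of values for a single condition key as a logical OR, so a token from any listed repository matches.

```javascript
// Hypothetical mapping of teams to the repositories they own. Each team gets
// one IAM role instead of one role per repository.
const teamRepositories = new Map([
  ["payments", ["testorg-poc/payments-api", "testorg-poc/payments-ui"]],
  ["platform", ["testorg-poc/github-actions-test-repo"]],
]);

// Returns the sub claim patterns for a team role's trust policy,
// allowing any ref of each listed repository.
function subjectPatternsForTeam(team) {
  const repos = teamRepositories.get(team);
  if (!repos) throw new Error(`Unknown team: ${team}`);
  return repos.map((repo) => `repo:${repo}:*`);
}

// Builds the Condition block of the team role's trust policy.
function teamRoleCondition(team) {
  const provider = "token.actions.githubusercontent.com";
  return {
    StringEquals: { [`${provider}:aud`]: "sts.amazonaws.com" },
    // StringLike with a list of values matches if any pattern matches.
    StringLike: { [`${provider}:sub`]: subjectPatternsForTeam(team) },
  };
}

console.log(JSON.stringify(teamRoleCondition("payments"), null, 2));
```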

Use Ephemeral Runners

Configure your GitHub Actions runners to run in “ephemeral” mode, which creates (and destroys) an individual short-lived compute environment per job, on demand. The short environment lifespan and per-build isolation reduce the risk of data leakage, even in multi-tenant continuous integration environments, as each build job remains isolated from others on the underlying host.
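A minimal sketch of how a scale-up function might start a runner in ephemeral mode: it builds EC2 user data that runs the runner's config.sh with the --ephemeral flag, so the runner accepts a single job and then deregisters. It assumes the runner application is preinstalled under /opt/actions-runner, that a non-root runner user exists, and that a registration token has already been obtained from the GitHub API; all names and values are illustrative.

```javascript
// Hypothetical helper; paths, user name and parameter values are illustrative.
function buildEphemeralRunnerUserData({ orgUrl, registrationToken, runnerName, labels, runnerGroup }) {
  const configArgs = [
    "--unattended",
    "--ephemeral", // take exactly one job, then deregister
    "--url", orgUrl,
    "--token", registrationToken,
    "--name", runnerName,
    "--labels", labels.join(","),
    "--runnergroup", runnerGroup,
  ].join(" ");
  const script = [
    "#!/bin/bash",
    // Assumes the runner is baked into the AMI under /opt/actions-runner and owned
    // by a non-root "runner" user (config.sh refuses to run as root by default).
    "cd /opt/actions-runner",
    `sudo -u runner ./config.sh ${configArgs}`,
    "sudo -u runner ./run.sh",
    // After the single job ends, shut down; with InstanceInitiatedShutdownBehavior
    // set to "terminate" at launch, this terminates the instance.
    "shutdown -h now",
  ].join("\n");
  // The EC2 RunInstances API expects UserData to be base64-encoded.
  return Buffer.from(script, "utf8").toString("base64");
}

const userData = buildEphemeralRunnerUserData({
  orgUrl: "https://github.com/testorg-poc",
  registrationToken: "EXAMPLE_TOKEN",
  runnerName: "ephemeral-runner-001",
  labels: ["self-hosted", "linux", "x64"],
  runnerGroup: "dev-runners",
});
console.log(Buffer.from(userData, "base64").toString("utf8"));
```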

As each job runs in a new environment created on demand, there is no need for a job to wait for an idle runner, which simplifies auto scaling. With the ability to scale runners on demand, you do not need to worry about turning build infrastructure off when it is not needed (for example, outside office hours), giving you a cost-efficient setup. To optimize the setup further, consider allowing developers to add instance type labels to their jobs, and launching the specific instance types that are optimal for the respective workflows.
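The instance type label idea can be sketched as a lookup from a label in the job's runs-on list (delivered as workflow_job.labels in the webhook payload) to an EC2 instance type. The size-* label convention, the chosen instance types, and the function name are assumptions for illustration.

```javascript
// Hypothetical convention: workflows request a size through a runs-on label,
// and the scale-up function maps that label to an EC2 instance type.
const instanceTypeByLabel = new Map([
  ["size-small", "t3.medium"],
  ["size-large", "c6i.2xlarge"],
  ["size-memory", "r6i.xlarge"],
]);
const DEFAULT_INSTANCE_TYPE = "t3.medium";

// labels: the job's label list, e.g. workflow_job.labels from the webhook payload.
function selectInstanceType(labels) {
  for (const label of labels) {
    const instanceType = instanceTypeByLabel.get(label);
    if (instanceType) return instanceType;
  }
  return DEFAULT_INSTANCE_TYPE;
}

console.log(selectInstanceType(["self-hosted", "linux", "size-large"])); // prints c6i.2xlarge
```

The launched runner also has to register with the same label; otherwise GitHub will not assign the job to it.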

There are a few considerations to take into account when using ephemeral runners:

- A job will remain queued until the runner EC2 instance has launched and is ready. This can take up to 2 minutes to complete. To speed up this process, consider using an optimized AMI with all prerequisites installed.

- Since each job is launched on a fresh runner, utilizing caching on the runner is not possible. For example, Docker images and libraries will always be pulled from source.

Use Runner Groups to isolate your runners based on security requirements

By using ephemeral runners in a single GitHub runner group, you are creating a pool of resources in the same AWS account that are used by all repositories sharing this runner group. Your organizational security requirements may dictate that your execution environments must be isolated further, such as by repository or by environment (such as dev, test, prod).

Runner groups allow you to define which runners can execute your workflows on a repository-by-repository basis. Creating multiple runner groups not only allows you to provide different types of compute environments, but also allows you to place your workflow executions in locations within AWS that are isolated from each other. For example, you may choose to locate your development workflows in one runner group and test workflows in another, with each ephemeral runner group deployed to a different AWS account.

Runners by definition execute code on behalf of your GitHub users. At a minimum, I recommend that your ephemeral runner groups are contained within their own AWS account and that this AWS account has minimal access to other organizational resources. When access to organizational resources is required, this can be given on a repository-by-repository basis through IAM role assumption with OIDC, and these roles can be given least-privilege access to the resources they require.
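One way to keep the account-per-group rule from drifting is to encode the mapping in configuration and check it before deployment. The sketch below assumes a hypothetical table from runner group names to AWS accounts (placeholder account IDs) and rejects any configuration in which two groups share an account.

```javascript
// Hypothetical mapping of runner groups to the AWS account and Region in which
// their ephemeral runners are launched; account IDs are placeholders.
const runnerGroupAccounts = new Map([
  ["dev-runners", { accountId: "111111111111", region: "eu-west-1" }],
  ["test-runners", { accountId: "222222222222", region: "eu-west-1" }],
  ["prod-runners", { accountId: "333333333333", region: "eu-west-1" }],
]);

// Throws if two runner groups would share an AWS account, which would
// defeat the account-level isolation between them.
function assertGroupsIsolated(groupAccounts) {
  const groupByAccount = new Map();
  for (const [group, { accountId }] of groupAccounts) {
    const existing = groupByAccount.get(accountId);
    if (existing) {
      throw new Error(`Runner groups ${existing} and ${group} share account ${accountId}`);
    }
    groupByAccount.set(accountId, group);
  }
}

// Looks up where a group's runners should be launched.
function launchTargetFor(group, groupAccounts = runnerGroupAccounts) {
  const target = groupAccounts.get(group);
  if (!target) throw new Error(`No AWS account configured for runner group ${group}`);
  return target;
}

assertGroupsIsolated(runnerGroupAccounts);
console.log(launchTargetFor("test-runners"));
```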

Optimize runner start up time using Amazon EC2 warm-pools

Ephemeral runners provide strong build isolation, simplicity and security. Since the runners are launched on demand, the job will be required to wait for the runner to launch and register itself with GitHub. While this usually happens in under 2 minutes, this wait time might not be acceptable in some scenarios.

We can use a warm pool of pre-registered ephemeral runners to reduce the wait time. Because these runners are already registered and waiting for work, as soon as a workflow job is queued, GitHub can assign it to one of the runners in the warm pool.

While there can be multiple strategies to manage the warm pool, I recommend the following strategy which uses AWS Lambda for scaling up and scaling down the ephemeral runners:

GitHub self-hosted runners warm pool flow

A GitHub workflow event is created by a trigger such as a code push to a repository or the merge of a pull request. GitHub sends this event as a webhook to an Amazon API Gateway endpoint, which invokes a Lambda function. This Lambda function validates the GitHub workflow event payload and logs events for observability and for building metrics. It can optionally be used to replenish the warm pool. Separate backend Lambda functions launch, scale up, and scale down the warm pool of EC2 instances. The EC2 instances (runners) are registered with GitHub at launch time. The registered runners wait for work from GitHub’s internal job queue, and as soon as workflow events are triggered, GitHub assigns each job to one of the runners in the warm pool for execution. The runner is automatically deregistered once the job completes. A job can be a build or deploy request, as defined in your GitHub workflow.
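The validation step performed by the webhook Lambda function could look like the following sketch. GitHub signs each delivery with an HMAC-SHA256 of the raw request body, keyed with the webhook secret, and sends it in the X-Hub-Signature-256 header. The parseWorkflowJobEvent helper and the fields it extracts are illustrative assumptions, not part of any library.

```javascript
const crypto = require("crypto");

// Verifies the X-Hub-Signature-256 header: "sha256=" followed by the hex
// HMAC-SHA256 of the raw body, keyed with the webhook secret.
function isValidGitHubSignature(rawBody, signatureHeader, webhookSecret) {
  if (typeof signatureHeader !== "string" || !signatureHeader.startsWith("sha256=")) {
    return false;
  }
  const expected = Buffer.from(
    "sha256=" + crypto.createHmac("sha256", webhookSecret).update(rawBody).digest("hex"),
    "utf8"
  );
  const received = Buffer.from(signatureHeader, "utf8");
  // timingSafeEqual throws if lengths differ, so check the length first.
  return received.length === expected.length && crypto.timingSafeEqual(received, expected);
}

// Hypothetical helper for the webhook Lambda: validates the delivery and
// extracts the fields that are later logged and turned into metrics.
function parseWorkflowJobEvent(headers, rawBody, webhookSecret) {
  // Header casing depends on the API Gateway integration, so check both forms.
  const signature = headers["x-hub-signature-256"] ?? headers["X-Hub-Signature-256"];
  const eventName = headers["x-github-event"] ?? headers["X-GitHub-Event"];
  if (!isValidGitHubSignature(rawBody, signature, webhookSecret)) {
    throw new Error("Invalid webhook signature");
  }
  if (eventName !== "workflow_job") return null; // ignore other event types
  const payload = JSON.parse(rawBody);
  return {
    event: eventName,
    action: payload.action, // e.g. queued, in_progress, completed
    repository: payload.repository.full_name,
    name: payload.workflow_job.name,
    labels: payload.workflow_job.labels,
  };
}
```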

With a warm pool in place, we expect wait time to be reduced by 70-80%.

Considerations

- Increased complexity, because runners can be over-provisioned. This depends on how long a runner EC2 instance takes to launch and reach a ready state, and on how frequently the scale-up Lambda function is configured to run. For example, if the scale-up Lambda function runs every minute and the EC2 runner takes 2 minutes to launch, the scale-up function sees the same queued job twice and launches 2 instances for it. One mitigation is to use Amazon EC2 Auto Scaling groups to manage the EC2 warm pool and its desired capacity, with predictive scaling policies tied back to incoming GitHub workflow events (that is, build job requests).

- This strategy may have to be revised when supporting Windows or macOS runners, because their start-up times can vary.
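Over-provisioning can also be reduced by counting instances that are still launching when the scale-up function decides how many runners to start. The following is a hypothetical calculation, not taken from a specific implementation; targetIdle stands for the desired warm pool size and maxRunners for a cost cap, and totalRunners includes pending instances.

```javascript
// Hypothetical scale-up calculation. Subtracting pendingRunners prevents a
// second launch for a job whose instance is still starting.
function runnersToLaunch({ queuedJobs, idleRunners, pendingRunners, targetIdle, totalRunners, maxRunners }) {
  // Runners needed to cover queued jobs plus the desired idle warm pool.
  const needed = queuedJobs + targetIdle - idleRunners - pendingRunners;
  // Never exceed the configured maximum number of runners.
  const headroom = maxRunners - totalRunners;
  return Math.max(0, Math.min(needed, headroom));
}

// First scale-up run: one job queued, nothing launched yet.
console.log(runnersToLaunch({ queuedJobs: 1, idleRunners: 0, pendingRunners: 0, targetIdle: 0, totalRunners: 0, maxRunners: 10 })); // 1
// One minute later: the job is still queued, but its instance is pending.
console.log(runnersToLaunch({ queuedJobs: 1, idleRunners: 0, pendingRunners: 1, targetIdle: 0, totalRunners: 1, maxRunners: 10 })); // 0
```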

Use an optimized AMI to speed up the launch of GitHub self-hosted runners

Amazon Machine Images (AMIs) provide a preconfigured, optimized image that can be used to launch the runner EC2 instance. By using a custom AMI, you reduce the launch time of a new runner, since dependencies and tools are already installed. Builds are also more consistent, because all instances run the same versions of dependencies and tools. Runners benefit from increased stability and security compliance when images are tested and approved before being distributed for use as runner instances.

When building an AMI for use as a GitHub self-hosted runner, consider the following:

- Choosing the right OS base image for the builds. This will depend on your tech stack and toolset.

- Install the GitHub runner application as part of the image. Ensure automatic runner updates are enabled to reduce the overhead of managing runner versions. If a specific runner version must be used, you can disable automatic runner updates to avoid untested changes. Keep in mind that, if updates are disabled, a runner must be updated manually within 30 days of a new version becoming available.

- Install build tools and dependencies from trusted sources.

- Ensure runner logs are captured and forwarded to your security information and event management (SIEM) of choice.

- The runner requires internet connectivity to access GitHub. This may require configuring proxy settings on the instance depending on your networking setup.

- Configure any artifact repositories the runner requires. This includes sources and authentication.

- Automate the creation of the AMI using tools such as EC2 Image Builder to achieve consistency.
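Where automatic runner updates are disabled, an AMI pipeline could check how long it has left to ship an image with the newer runner. This sketch assumes you already know the latest runner version and its release date (for example, from the actions/runner releases); the function names and the example versions and dates are hypothetical.

```javascript
const DAY_MS = 24 * 60 * 60 * 1000;

// Compares dotted numeric versions such as "2.317.0"; returns <0, 0 or >0.
function compareVersions(a, b) {
  const pa = a.split(".").map(Number);
  const pb = b.split(".").map(Number);
  for (let i = 0; i < Math.max(pa.length, pb.length); i++) {
    const diff = (pa[i] || 0) - (pb[i] || 0);
    if (diff !== 0) return diff;
  }
  return 0;
}

// Hypothetical pipeline check: returns the date by which an AMI with the newer
// runner must ship (30 days after its release), or null if the AMI is current.
function amiRebuildDeadline(bakedVersion, latestVersion, latestReleasedAt) {
  if (compareVersions(bakedVersion, latestVersion) >= 0) return null;
  return new Date(new Date(latestReleasedAt).getTime() + 30 * DAY_MS);
}

const deadline = amiRebuildDeadline("2.316.1", "2.317.0", "2024-06-01T00:00:00Z");
console.log(deadline.toISOString()); // 2024-07-01T00:00:00.000Z
```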

Use Spot instances to save costs

The cost of scaling up the runners, as well as of maintaining a warm pool, can be minimized using Spot Instances, which can save up to 90% compared to On-Demand prices. However, some longer-running builds or batch jobs may not tolerate a Spot Instance being interrupted with a two-minute notice. A mixed pool of instances is a good option here: route such jobs to On-Demand EC2 instances and the rest to Spot Instances to cater for diverse build needs. You can do this by assigning labels to the runner at launch and registration time. The On-Demand instances can then be covered by a Savings Plan to get cost benefits.
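A minimal sketch of the label-based routing: jobs carrying a hypothetical on-demand label get an On-Demand instance, and everything else is requested as Spot through the InstanceMarketOptions parameter of the EC2 RunInstances API. The function only builds the request input, which could be passed to RunInstancesCommand in the AWS SDK for JavaScript v3; the label name and the capacity-type tag are assumptions.

```javascript
// Hypothetical label that workflows add to runs-on when a job cannot
// tolerate a Spot interruption (for example, a long-running build).
const ON_DEMAND_LABEL = "on-demand";

// Builds EC2 RunInstances input; Spot unless the job asks for On-Demand.
function buildRunInstancesInput({ labels, imageId, instanceType, userData }) {
  const useSpot = !labels.includes(ON_DEMAND_LABEL);
  const input = {
    ImageId: imageId,
    InstanceType: instanceType,
    MinCount: 1,
    MaxCount: 1,
    UserData: userData, // base64-encoded runner bootstrap script
    InstanceInitiatedShutdownBehavior: "terminate",
    TagSpecifications: [
      {
        ResourceType: "instance",
        Tags: [{ Key: "capacity-type", Value: useSpot ? "spot" : "on-demand" }],
      },
    ],
  };
  if (useSpot) {
    input.InstanceMarketOptions = {
      MarketType: "spot",
      SpotOptions: { SpotInstanceType: "one-time", InstanceInterruptionBehavior: "terminate" },
    };
  }
  return input;
}

const request = buildRunInstancesInput({
  labels: ["self-hosted", "linux"],
  imageId: "ami-0123456789abcdef0", // placeholder AMI ID
  instanceType: "c6i.large",
  userData: "",
});
console.log(JSON.stringify(request, null, 2));
```

As with instance size labels, the On-Demand runner must register with the on-demand label so that GitHub routes the job to it.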

Record runner metrics using Amazon CloudWatch for Observability

It is vital for the observability of the overall platform to generate metrics for the EC2-based GitHub self-hosted runners. Examples of GitHub runner metrics include the number of GitHub workflow events queued or completed per minute, and the number of EC2 runners up and available in the warm pool.

We can log the triggered workflow events and runner logs in Amazon CloudWatch Logs and then use CloudWatch embedded metrics to collect metrics such as the number of workflow events queued, in progress, and completed. Using timestamps such as “started_at” and “completed_at” from the workflow event payload, we can calculate build timings: how long a job ran and, by comparing when a job was queued with when it started, the build wait time.
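As a sketch of these timing calculations, the code below computes the seconds between two ISO 8601 timestamps and uses it in a hypothetical tracker that records when a job's queued event arrives and reports the wait when its in_progress event arrives. The in-memory Map is an assumption for illustration; separate Lambda invocations do not reliably share memory, so a real deployment would need a persistent store.

```javascript
// Returns the seconds between two ISO 8601 timestamps, or null if either is
// missing (for example, completed_at is null while a job is still queued).
function secondsBetween(fromIso, toIso) {
  if (!fromIso || !toIso) return null;
  return (Date.parse(toIso) - Date.parse(fromIso)) / 1000;
}

// Hypothetical wait-time tracker keyed by job ID.
const queuedAtByJobId = new Map();

function observeJobEvent(action, jobId, receivedAtIso) {
  if (action === "queued") {
    queuedAtByJobId.set(jobId, receivedAtIso);
    return null;
  }
  if (action === "in_progress" && queuedAtByJobId.has(jobId)) {
    const waitSeconds = secondsBetween(queuedAtByJobId.get(jobId), receivedAtIso);
    queuedAtByJobId.delete(jobId);
    return waitSeconds;
  }
  return null;
}

observeJobEvent("queued", 42, "2022-10-11T05:50:35Z");
console.log(observeJobEvent("in_progress", 42, "2022-10-11T05:52:05Z")); // 90 (wait time)
console.log(secondsBetween("2022-10-11T05:52:05Z", "2022-10-11T05:58:05Z")); // 360 (run duration)
```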

As an example, the following is a sample incoming GitHub workflow event logged in Amazon CloudWatch Logs:

```json
{
  "hostname": "xxx.xxx.xxx.xxx",
  "requestId": "aafddsd55-fgcf555",
  "date": "2022-10-11T05:50:35.816Z",
  "logLevel": "info",
  "logLevelId": 3,
  "filePath": "index.js",
  "fullFilePath": "/var/task/index.js",
  "fileName": "index.js",
  "lineNumber": 83889,
  "columnNumber": 12,
  "isConstructor": false,
  "functionName": "handle",
  "argumentsArray": [
    "Processing Github event",
    "{\"event\":\"workflow_job\",\"repository\":\"testorg-poc/github-actions-test-repo\",\"action\":\"queued\",\"name\":\"jobname-buildanddeploy\",\"status\":\"queued\",\"started_at\":\"2022-10-11T05:50:33Z\",\"completed_at\":null,\"conclusion\":null}"
  ]
}
```

To turn the logged elements above, such as “status”: “queued”, “repository”: “testorg-poc/github-actions-test-repo”, “name”: “jobname-buildanddeploy” and the workflow “event”, into metrics, you can emit logs in CloudWatch embedded metric format from the application code or from a dedicated metrics Lambda function, using one of the CloudWatch metrics client libraries for your language of choice, listed in Creating logs in embedded metric format using the client libraries – Amazon CloudWatch.

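Essentially, these libraries write a structured log event whose _aws metadata object declares which fields are metric values and which are dimensions, so that CloudWatch can read the log event and generate metrics from it. The following snippet builds such a log event directly: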

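```javascript
// Emits one log line in CloudWatch embedded metric format (EMF).
// status, eventName, repository and name come from the parsed workflow event.
function logBuildEventMetric({ status, eventName, repository, name }) {
  console.log(
    JSON.stringify({
      message: '[Embedded Metric]', // Identifier for metric logs in CloudWatch Logs
      build_event_metric: 1, // Metric name and value
      status, // Dimension name and value
      eventName,
      repository,
      name,
      _aws: {
        Timestamp: Date.now(),
        CloudWatchMetrics: [
          {
            Namespace: 'demo_2',
            Dimensions: [['status', 'eventName', 'repository', 'name']],
            Metrics: [{ Name: 'build_event_metric', Unit: 'Count' }],
          },
        ],
      },
    })
  );
}

logBuildEventMetric({
  status: 'queued',
  eventName: 'workflow_job',
  repository: 'testorg-poc/github-actions-test-repo',
  name: 'jobname-buildanddeploy',
});
```

A sample architecture: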

Consumption of GitHub webhook events

The CloudWatch metrics can be published to your dashboards or forwarded to any external tool, depending on your requirements. Once the metrics are in place, CloudWatch alarms and notifications can be configured to detect and respond to warm pool exhaustion.
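For pool exhaustion, an alarm could fire when a warm pool size metric stays below one. The sketch builds input for the CloudWatch PutMetricAlarm API (for example, PutMetricAlarmCommand in the AWS SDK for JavaScript v3). It assumes a hypothetical idle_runners metric published in the demo_2 namespace used in the embedded metric example, and a placeholder SNS topic ARN for notifications; the thresholds are illustrative.

```javascript
// Builds PutMetricAlarm input for a hypothetical idle_runners metric.
function buildPoolExhaustionAlarm(snsTopicArn) {
  return {
    AlarmName: "github-runner-warm-pool-exhausted",
    Namespace: "demo_2",
    MetricName: "idle_runners",
    Statistic: "Minimum",
    Period: 60, // seconds
    EvaluationPeriods: 3, // alarm after 3 consecutive empty minutes
    Threshold: 1,
    ComparisonOperator: "LessThanThreshold",
    TreatMissingData: "breaching", // no data from the pool is treated as exhausted
    AlarmActions: [snsTopicArn],
  };
}

console.log(buildPoolExhaustionAlarm("arn:aws:sns:eu-west-1:111122223333:runner-alerts"));
```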

Conclusion

In this blog post, we outlined several best practices covering security, scalability, and cost efficiency when using GitHub Actions with EC2 self-hosted runners on AWS. We covered how using short-lived credentials combined with ephemeral runners reduces security and build contamination risks. We also showed how runners can be optimized for faster startup and job execution using optimized AMIs and warm EC2 pools. Last but not least, cost efficiency can be maximized by using Spot Instances for runners in the right scenarios.

Resources:

- Use IAM roles to connect GitHub Actions to actions in AWS

- Integrating with GitHub Actions – CI/CD pipeline to deploy a Web App to Amazon EC2

- Creating logs in embedded metric format using the client libraries – Amazon CloudWatch

- GitHub – awslabs/aws-embedded-metrics-node: Amazon CloudWatch Embedded Metric Format Client Library

- GitHub – philips-labs/terraform-aws-github-runner: Terraform module for scalable GitHub action runners on AWS