Canary Testing with AWS App Mesh and Tekton

October 13, 2022

Planning a release from product strategy to product delivery is a complex process with multiple interconnected services and workflows. On top of that, creating and deploying new versions of services into production has always been a challenge for developers. DevOps teams must choose an effective deployment strategy to enable Continuous Integration and Continuous Delivery (CI/CD) pipelines in their release cycle.

There are many options available today and the best option will depend on numerous factors including impact on end users and the use case. Some of the common application deployment strategies used in DevOps methodology are:

- Rolling deployment: A deployment strategy that slowly replaces previous versions of an application with new versions of an application by completely replacing the infrastructure on which the application is running.

- Blue/Green deployment: A deployment strategy in which you create two separate, but identical environments. One environment (blue) is running the current application version and one environment (green) is running the new application version.

- A/B testing deployment: This consists of routing a subset of users to a new functionality under specific conditions to compare the two versions to figure out which performs better.

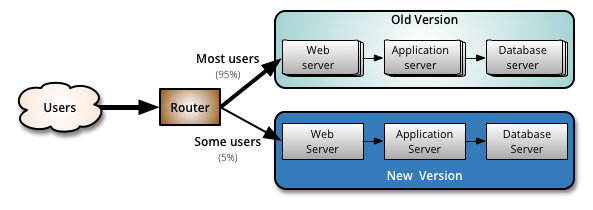

- Canary deployment: This helps to improve the process of deploying new software to production by sending traffic in a phased manner to a subset/percentage of users to minimize the potential risk.

In this blog post, a preview of our talk in the AWS booth at KubeCon + CloudNativeCon 2022 in Detroit, we will be focusing on Canary deployment. A Canary deployment is a deployment strategy that releases a new software version in production by slowly rolling out the change to a small subset of users. As you gain confidence in the deployment, the update is rolled out to the rest of the users. Canary deployments give developers the flexibility to compare different service versions side by side, which gives them the confidence to deploy and test. If something goes wrong, you can easily roll back to the previous version with no downtime.

(Image: https://martinfowler.com/bliki/CanaryRelease.html)

By combining a Canary deployment strategy with a service mesh, you can easily configure the traffic flows between your services and achieve the control needed to deploy Canary services. In this blog post we will use AWS App Mesh, an AWS managed service mesh that provides application-level networking to make it easy for your services to communicate with each other across multiple types of compute infrastructure. App Mesh can help you manage microservices-based environments by facilitating application-level traffic management, providing consistent observability tooling, and enabling enhanced security configurations. App Mesh provides controls to configure and standardize traffic flows between the services. This enables you to manage the Canary deployment using App Mesh configurations, where you can start by sending only a small part of the traffic to the new version. If all goes well, you can continue to shift more traffic to the new version until it is serving 100% of traffic, while keeping the ability to roll back to the original state if there is undesirable behavior or errors.
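To make the traffic split concrete, here is a minimal sketch of the kind of App Mesh resource that carries the weights. It prints a VirtualRouter manifest for the App Mesh controller's `appmesh.k8s.aws/v1beta2` API with two weighted targets. The resource names, the namespace, and the port are assumptions chosen for illustration and are not taken from the demo repositories.

```shell
# Print an App Mesh VirtualRouter manifest that splits traffic between two
# versions of a backend service.
# Usage: render_virtual_router <weight_v1> <weight_v2>
render_virtual_router() {
  weight_v1=$1
  weight_v2=$2
  cat <<EOF
apiVersion: appmesh.k8s.aws/v1beta2
kind: VirtualRouter
metadata:
  name: catalogdetail-router        # hypothetical name
  namespace: demo                   # hypothetical namespace
spec:
  listeners:
    - portMapping:
        port: 3000                  # assumed application port
        protocol: http
  routes:
    - name: catalogdetail-route     # hypothetical name
      httpRoute:
        match:
          prefix: /
        action:
          weightedTargets:
            - virtualNodeRef:
                name: catalogdetail-v1   # hypothetical virtual node
              weight: ${weight_v1}
            - virtualNodeRef:
                name: catalogdetail-v2   # hypothetical virtual node
              weight: ${weight_v2}
EOF
}

# Start the canary with 20% of the traffic on the new version.
render_virtual_router 80 20
```

Shifting more traffic then amounts to rendering the manifest again with different weights, for example `render_virtual_router 50 50`, and applying the result to the cluster after review.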

It is easy to self-manage the configuration in AWS App Mesh for Canary deployments if there are just a few services. But as we grow our service mesh with more services, this becomes unmanageable. This is where the open source Tekton framework for building CI/CD pipelines becomes beneficial. Paired with Helm charts, Tekton helps manage and scale canary deployments on Kubernetes. Tekton is an open source project which allows you to build cloud-native CI/CD systems on top of Kubernetes. It consists of Tekton Pipelines, which provides the building blocks, as well as supporting components such as Tekton CLI and Tekton Catalog, that make Tekton a complete solution. Tekton installs and runs as an extension on a Kubernetes cluster and contains a set of Kubernetes Custom Resources that define the building blocks for your CI/CD pipelines. In this blog post, we will use a Helm template for App Mesh configuration to simplify the deployment process for canary releases and integrate the Helm template repo into Tekton pipelines for continuous delivery. We will also see how we can use separate Tekton pipelines based on personas to keep the separation of duties between the development and operations teams, thereby enabling team productivity and velocity.

In this blog we will demonstrate:

- How to combine AWS App Mesh with the cloud native CI/CD tool Tekton in order to release new application versions using the Canary deployment strategy.

- How to use these two powerful technologies (Tekton and AWS App Mesh) to build persona-based Tekton CI/CD pipelines for both the operations team and the development team.

- How developers can build the new version of an application from their Git Repository and deploy the application image to Container Registry using a Tekton pipeline.

- How the operations team can deploy this new version of the application to Amazon Elastic Kubernetes Service (Amazon EKS) and perform canary deployments of this new version by switching the traffic by percentage increments in an App Mesh configuration. This is done just by making use of a single configuration file without dealing with low-level Kubernetes resources such as Custom Resource Definitions (CRDs).

Solution Design

For this blog post, we will use a simple web application which has a frontend microservice called frontend and a backend microservice called catalogdetail. In order to run this application, we are going to first deploy both services on a fresh Amazon EKS cluster. Besides the application workloads, this cluster hosts the following components:

- AWS App Mesh

This is an AWS managed service mesh which controls the traffic splitting between multiple versions of our backend microservice. App Mesh consists of many low-level Custom Resource Definitions (CRDs) which can be quite complex to manage at scale. Our solution uses a Tekton based CI/CD pipeline and a Helm repository to abstract most of the complexity.

- Tekton Environment

Tekton acts as our CI/CD platform for this blog post. It contains two pipelines: one builds our backend microservice and pushes it to the Amazon Elastic Container Registry, and the other controls the deployment of new versions using AWS App Mesh and a custom designed Helm chart. We will deploy the Tekton related controller and CRDs with the Tekton Operator.

We also have the following components outside our Amazon EKS cluster:

- aws-tekton-canary-testing-app GitHub Repository

This repository will be hosted on GitHub and contains the application source code for our backend microservice catalogdetail. It is connected to the build CI/CD pipeline over the webhook integration from GitHub. This repository will be used by the developer (see the diagram) to push the code changes to the backend microservice catalogdetail.

- aws-tekton-canary-testing-deploy GitHub Repository

This repository will be hosted on GitHub as well and contains our custom Helm chart, which allows the operator (see the diagram) to control the Canary deployment of our backend microservice catalogdetail. This repository is also connected to the CI/CD pipeline over the GitHub provided webhook integration.

- Amazon Elastic Container Registry (Amazon ECR) Image Repository

Amazon ECR contains the container images of our backend microservice catalogdetail. New image versions built by the Tekton CI/CD pipeline will be uploaded to this repository.

So far, we have described the main components of our solution design and now we will talk about how to release a new version of our backend microservice catalogdetail with a two step solution approach. You can refer to the diagram to understand these flows.

- (Developer) Develop and build a new version of the backend microservice

(Step 1): The developer pushes the source code of the new version of the backend microservice catalogdetail to the aws-tekton-canary-testing-app repository on GitHub and applies the corresponding Git tags. (Step 2): As soon as the code is pushed into GitHub, the webhook triggers the apps-build Tekton pipeline. (Step 3): This pipeline clones the source code from the GitHub repository, (Step 4): builds a Docker image and uploads it into Amazon ECR.

- (Operator) Adjusts the Canary config and deploys the new version of the backend microservice

The Operator interacts with the aws-tekton-canary-testing-deploy repository in order to control the release of the new backend catalogdetail microservice version. Using a simple Helm chart stored in the GitHub repository, the Operator is able to control what percentage of the overall traffic should be directed to each version. The Helm chart abstracts the complexity of the App Mesh configuration and App Mesh CRDs. For example, the Operator can specify that only 20% of the traffic should flow to the newly released version and 80% of the traffic should flow to the older version. The values.yaml file stored in the aws-tekton-canary-testing-deploy repo holds the Canary configuration, and its tag value points to a corresponding image tag in Amazon ECR.

(Step 5): When the Operator updates the Canary config, which is part of the Helm chart, and pushes the changes to GitHub, (Step 6): a webhook sent to the Amazon EKS cluster will execute the apps-deploy CI/CD pipeline. (Step 7): This pipeline is going to clone the Helm Chart stored within the GitHub repository. (Step 8): After this, the pipeline will update the App Mesh CRDs by deploying the Helm chart (if the Helm chart is already deployed, it will simply update it).

- (End-User) Observe the traffic shifting from the frontend application

(Step 9): The End-User can observe the content of the backend microservice catalogdetail by simply invoking the URL exposed by the frontend microservice frontend. After successful deployment, the End-User should see different responses from each version of catalogdetail by invoking the frontend application multiple times.
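As an illustration of the 80/20 split the Operator controls, the sketch below prints a hypothetical Canary configuration and checks that its weights add up to 100. The key names (`canaryConfig`, `version`, `tag`, `weight`) and the `v1.0.0` tag are assumptions made for this example; the values.yaml in the aws-tekton-canary-testing-deploy repository may be laid out differently.

```shell
# Print a hypothetical Canary configuration. The real chart's keys may differ.
canary_values_sketch() {
  cat <<'EOF'
canaryConfig:
  - version: v1
    tag: v1.0.0      # assumed image tag in Amazon ECR
    weight: 80       # percent of traffic kept on the older version
  - version: v2
    tag: v2.0.0
    weight: 20       # percent of traffic sent to the new version
EOF
}

# Sum every "weight:" entry read from stdin and print the total.
sum_weights() {
  awk '$1 == "weight:" { total += $2 } END { print total + 0 }'
}

# Because this post treats the weights as percentages, they should total 100.
canary_values_sketch | sum_weights
```

A check like this could run before the change is pushed, so that a typo in a weight is caught before the webhook triggers the deploy pipeline.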

Benefits of this approach

Each persona can have a separate Git repository and manage the CI/CD pipeline independently. For example, the frontend team can have their own pipeline to build and push an application image, the backend team can have one, and operators can have one to manage the deployment of the applications. Tekton gives us the flexibility to use different pipelines and associate each of them with its respective Git repository. You can also extend this approach to different environments as needed.

As the number of services in the App Mesh grows, it becomes a challenge to update App Mesh resources whenever a service needs to change, whether for traffic shifting, service discovery, or traffic encryption. There is no tooling yet in App Mesh to automate these changes, and managing Kubernetes CRDs manually increases the complexity of performing a Canary deployment with App Mesh. The approach used in this solution packages all the App Mesh resources for the backend service, such as virtualservice, virtualnode, and virtualrouter, into a Helm template and automates the Helm deployment using a Tekton pipeline.

Using the approach in this blog, you can perform a Canary deployment just by updating one file (values.yaml) of the Helm template that has the canaryConfig values for backend version and percentage of traffic diversion. The Tekton pipeline webhook listens for this code change, triggers the deployment of the new version, and starts the Canary deployment of this new version.

Walkthrough

This section covers how to release a new version of an application using the Canary deployment strategy and the solution previously outlined.

Prerequisites

This blog assumes that you have the following setup already done:

- An existing Amazon EKS Cluster with a configured node group.

- A personal GitHub Account in order to fork repositories. You can create a GitHub Account for free.

- Tools installed on a machine with access to the AWS and Kubernetes API Server. This could be your workstation or a Cloud9 Instance / Bastion Host. The specific tools required are:

  - The AWS CLI

  - The eksctl utility used for creating and managing Kubernetes clusters on Amazon EKS

  - The kubectl utility used for communicating with the Kubernetes cluster API server

  - The Helm CLI used for installing Helm Charts

  - The GitHub CLI used to modify the webhook configuration

Step 1 – Set up demo environment

- Open the links of the two repositories in your favorite web browser and click the Fork button in order to fork them to your personal GitHub account:

  a. aws-tekton-canary-testing-app

  b. aws-tekton-canary-testing-deploy

- Authenticate in the GitHub CLI (gh) with your own GitHub account (the one you forked the repositories into). Follow the interactive steps by running the following command:

    $ gh auth login

- Clone the aws-tekton-canary-testing-prerequisites repository:

    $ git clone https://github.com/aws-samples/aws-tekton-canary-testing-prerequisites
    $ cd aws-tekton-canary-testing-prerequisites

- Open install.sh with your favorite text editor and set the following environment variables:

    # Your AWS Account ID
    export AWS_ACCOUNT_ID=""
    # Your AWS region (e.g. eu-central-1)
    export AWS_REGION=""
    # Name of your EKS Cluster
    export EKS_CLUSTER_NAME=""
    # GitHub ORG name (your GitHub username)
    export GITHUB_ORG_NAME=""
    # Name of the forked aws-tekton-canary-testing-app repository
    export GITHUB_APP_REPO_NAME=""
    # Name of the forked aws-tekton-canary-testing-deploy repository
    export GITHUB_DEPLOYMENT_REPO_NAME=""

- After updating these environment variables, save the file and run the script with the following commands:

    $ chmod u+x install.sh
    $ ./install.sh
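Since the script runs for several minutes, it can help to catch an empty variable before it starts. The following is a small sketch of such a check. The helper `check_required_vars` is not part of install.sh; it is a hypothetical addition that could be pasted below the export lines.

```shell
# Report every listed variable that is unset or empty.
# Returns 0 when all are set, 1 otherwise.
check_required_vars() {
  missing=0
  for name in "$@"; do
    # Indirect lookup of the variable whose name is stored in $name.
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Possible use inside install.sh, after the export lines:
#   check_required_vars AWS_ACCOUNT_ID AWS_REGION EKS_CLUSTER_NAME \
#     GITHUB_ORG_NAME GITHUB_APP_REPO_NAME GITHUB_DEPLOYMENT_REPO_NAME || exit 1
```

The helper only checks that each value is non-empty; it does not verify that the account, region, cluster, or repositories actually exist.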

The script will install the following resources into your AWS account and EKS cluster:

GitHub Account

- Set up a webhook configuration for each forked repository

AWS Account

- Create 2 Amazon ECR repositories to store the container images for the frontend and backend microservices

- Add an OIDC provider to the Amazon EKS cluster in order to enable IRSA

- Add required IAM policies for IRSA enabled Kubernetes service accounts

Kubernetes Resources (deployed within the Amazon EKS Cluster)

- App Mesh Controller to manage AWS App Mesh through Custom Resource Definitions (CRDs)

- Frontend Microservice Deployment

- Backend Microservice Deployment

- Tekton Operator to install Tekton infra components such as pipelines, triggers and dashboard

- Amazon EKS CSI Controller to create Amazon Elastic Block Store (Amazon EBS) backed Persistent Volumes

- Tekton Resources for the CI/CD build pipeline

- Tekton Resources for the CI/CD deploy pipeline

- Various Kubernetes service accounts used by the components deployed

The script takes approximately 10-15 minutes to install the components mentioned above (depending on your internet connectivity). Please keep your terminal open until everything is installed and the output section is displayed.

After the successful installation of the environment through the script, you should receive the following three URLs as output:

- FRONTEND_ALB_ENDPOINT: This URL lets you reach the frontend of our web application. In order to verify, please paste the URL into your favorite web browser. You should see a simple frontend application which connects to only one version of the backend application (you can verify this by refreshing the browser window multiple times).

- TEKTON_APP_PIPELINE_ENDPOINT: This is the endpoint URL for the Tekton Image Build pipeline. We are going to use this URL in order to update the Webhook of the aws-tekton-canary-testing-app repository in a subsequent step.

- TEKTON_DEPLOY_PIPELINE_ENDPOINT: This is the endpoint URL for the Tekton Canary Deploy pipeline. We are going to use this URL in order to update the Webhook of the aws-tekton-canary-testing-deploy repository in a subsequent step.

After the successful execution of the steps outlined previously, you should have your environment configured. Currently, the frontend service connects to one version of the backend microservice. We are going to change this situation by releasing a new version of the app in the steps below.

Step 2 – Developer Pipeline

In order to release a new version of our backend application, we have to make the required changes to the codebase, tag the new version, push it to our upstream GitHub repo and finally let Tekton build a new container image which will be pushed to the Amazon Elastic Container Registry (ECR).

- Open the recently cloned aws-tekton-canary-testing-app repository in your favorite text editor or IDE.

- Make the following changes to the file app.js:

  a. Line 13: Change the version of the product api from 1 to 2

  b. Line 14: Add one more vendor to the vendors array (e.g. SWITZERLAND.com)

- Save the file, commit your changes and push them to the upstream GitHub repository with the following commands:

    git add -A && git commit -m "release v2.0.0 of product api"
    git push
    git tag v2.0.0
    git push origin v2.0.0

  Please note that we have also created a git tag in order to mark the release of the new product api version.

- The configuration of your GitHub repository is going to send a Webhook to the Tekton Event Listener, which triggers the Tekton based CI/CD pipeline in order to build a container image and upload it to Amazon ECR. Please log in to your AWS Account and verify the uploaded image within Amazon ECR (Elastic Container Registry → Repositories → catalog-backend).
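The same verification can be done from the terminal. The sketch below wraps the AWS CLI command `aws ecr describe-images` in a small helper. The function name `ecr_image_exists` is hypothetical, and the example assumes the image tag matches the Git tag `v2.0.0` and that your AWS CLI is already configured for the right account and region.

```shell
# Succeed when the given tag exists in the given Amazon ECR repository.
# Usage: ecr_image_exists <repository-name> <image-tag>
ecr_image_exists() {
  repo=$1
  tag=$2
  # describe-images exits non-zero when the tag is not found.
  aws ecr describe-images \
    --repository-name "$repo" \
    --image-ids "imageTag=$tag" >/dev/null 2>&1
}

# Possible use once the build pipeline has finished:
#   ecr_image_exists catalog-backend v2.0.0 && echo "image is available"
```

If the check fails shortly after the push, the pipeline may still be running, so it is worth retrying after a minute or two before investigating further.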

Step 3 – Operator Pipeline

Once the new version of our application is ready for deployment, we can make use of the second GitHub repository called aws-tekton-canary-testing-deploy. This repository contains a Helm chart which allows the operator to control the traffic distribution which flows to each version of the application. Without this Helm repository, an operator would need to deploy and modify AWS App Mesh resources directly (over Kubernetes CRDs). For people new to AWS App Mesh, this can add unnecessary complexity. The following walkthrough explains how to release our previously built version of our product api and how to shift traffic equally between the new and old versions.

- Open the recently cloned aws-tekton-canary-testing-deploy repository in your favorite text editor or IDE.

- Make the following changes to the file values.yaml:

  a. Line 19: Reduce the weight which specifies how much traffic will be shifted to version one from 100 to 50

  b. End of file: Add the Canary configuration for v2 of our backend api

- Save the file, commit your changes and push them to the upstream GitHub repository with the following commands:

    git add -A && git commit -m "deploy v2.0.0 of product api"
    git push

- The configuration of your GitHub repository is going to send a Webhook to the Tekton Event Listener, which triggers the Tekton based CI/CD pipeline in order to deploy the related AWS App Mesh resources and split the incoming traffic equally between the old and new versions of the backend service. Open the FRONTEND_ALB_ENDPOINT in your favorite web browser and refresh the page multiple times. You should see that approximately every second refresh displays the second version of your application.
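Refreshing by hand gives only a rough impression of the split. As a sketch, the helper below tallies version labels and prints each version's share. It assumes you have already reduced every response to a single label per line (for example `v1` or `v2`); how to extract that label depends on the frontend's markup and is not covered here.

```shell
# Tally version labels read from stdin (one label per line) and print
# "<label> <count> <share>" for each distinct label, sorted by label.
tally_versions() {
  awk '
    NF { count[$1]++; total++ }
    END {
      for (label in count) {
        printf "%s %d %.0f%%\n", label, count[label], 100 * count[label] / total
      }
    }
  ' | sort
}

# Example with recorded labels instead of live requests:
printf 'v1\nv2\nv2\nv1\n' | tally_versions
```

With a 50/50 configuration and a few dozen requests, the two shares should land close to each other, although small samples will rarely split exactly in half.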

Feel free to release additional versions of your application and play with the weight distribution of the Canary config. In addition to testing new versions and gradually sending new traffic to them, the Canary config is also great for rollbacks. Whenever you observe that a certain version isn’t performing as expected, just remove the version from the Canary config and the associated Tekton pipeline will automatically clean up the associated resources (e.g. App Mesh resources and deployments).

Cleanup

Please follow the instructions provided in the README within the aws-tekton-canary-testing-prerequisites GitHub repository in order to clean up any provisioned resources.

Conclusion

After explaining the key concepts behind the canary testing deployment strategy and the complexity of AWS App Mesh configuration for new users, we introduced how you can automate these changes and add some abstraction through popular open source tools such as Tekton and Helm. We also showed you our solution in action by releasing a new version of a simple demo application.

With the foundation explained in this blog post, we are curious to see how you extend the solution and build a more sophisticated release process. Aside from making the changes manually, there is also the possibility to fully automate the deployment and rollback of new versions by observing one or more key application metrics. Common metrics could be response latency or error rates observed from your application version. After collecting these metrics, you could build a custom application which automatically performs rollbacks and traffic shifting.
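As a starting point for such automation, the decision step could be as small as the sketch below. It compares an observed error rate for the new version against a threshold and prints the next action. The function name, the three action labels, and the idea of a single error-rate threshold are assumptions for illustration; collecting the metrics and updating the Canary config are left out.

```shell
# Decide the next canary action from an observed error rate.
# Usage: canary_decision <errors> <requests> <max_error_percent>
# Prints "wait" (no data yet), "rollback", or "promote".
canary_decision() {
  errors=$1
  requests=$2
  max_percent=$3
  if [ "$requests" -eq 0 ]; then
    echo "wait"
    return 0
  fi
  # Integer form of: errors / requests > max_percent / 100
  if [ $((errors * 100)) -gt $((max_percent * requests)) ]; then
    echo "rollback"
  else
    echo "promote"
  fi
}

# Example: 10 errors in 100 requests against a 5% threshold.
canary_decision 10 100 5
```

A fuller version would probably look at latency as well and require a minimum number of requests before promoting, so that a quiet period is not mistaken for a healthy release.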