Fault-isolated, zonal deployments with AWS CodeDeploy

June 23, 2024

In this blog post, you’ll learn how to use a new feature in AWS CodeDeploy to deploy your application one Availability Zone (AZ) at a time, helping increase the operational resilience of your services through improved fault isolation.

Introducing change to a system can be a time of risk. Even the most advanced CI/CD systems with comprehensive testing and phased deployments can still promote a bad change into production. A common approach to reduce this risk is using fractional deployments and closely monitoring critical metrics like availability and latency to gauge a deployment’s success. If the deployment shows signs of failure, the CI/CD system initiates an automated rollback.

This blog post will show you how to leverage CodeDeploy zonal deployments as part of a holistic AZ independent (AZI) architecture strategy, both patterns that many AWS service teams follow. With this feature, you no longer need to distinguish between infrastructure and deployment failures in order to respond to the event. You can use the same observability tools and recovery techniques for both, which allows you to contain the scope of impact to a single AZ and mitigate the impact more quickly and with less complexity. First, let’s define what an AZI architecture is so we can understand how this feature in CodeDeploy supports it.

Availability Zone independence

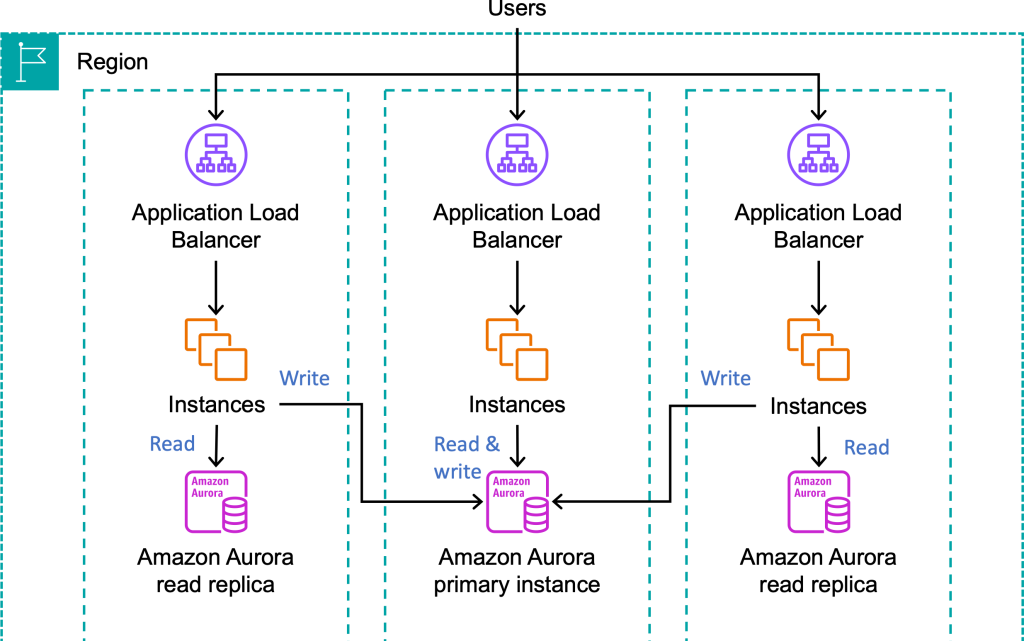

Fault isolation is an architectural pattern that limits the scope of impact of failures by creating independent fault containers that don’t share fate. It also allows you to quickly recover from failures by shifting traffic or resources away from the impaired fault container. AWS provides a number of different fault isolation boundaries, but the ones most people are familiar with are AZs and Regions. When you build multi-AZ architectures, you can design your application to implement AZI that uses the fault boundary provided by AZs to keep the interaction of resources isolated to their respective AZ (to the greatest extent possible).

An Availability Zone independent (AZI) architecture implemented by disabling cross-zone load balancing and using an independent database read replica per AZ. Only database writes have to cross an AZ boundary.

The result is that the impacts from an impairment in one AZ don’t cascade to resources in other AZs, making the operation of your application in one AZ independent from events in the others. You should also monitor the health of each AZ independently, for example by looking at per-AZ load balancer HTTPCode_Target_5XX_Count metrics, or by sending synthetic requests to the load balancer nodes in each AZ and recording availability and latency metrics for each. When an event occurs that impacts your availability or latency in a single AZ, you can use capabilities like Amazon Route 53 Application Recovery Controller zonal shift to shift traffic away from that AZ to quickly mitigate impact, often in single-digit minutes.
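To make the per-AZ monitoring idea concrete, here is a minimal Python sketch that turns per-AZ request and 5XX counts into an availability figure and flags zones below a threshold. The `ZoneSample` type, the 99% threshold, the zone IDs, and the sample numbers are all illustrative assumptions; in practice the counts would come from per-AZ load balancer metrics or synthetic requests.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ZoneSample:
    """Request and error counts observed for one AZ over one period (hypothetical shape)."""
    zone: str
    requests: int
    target_5xx: int


def availability(sample: ZoneSample) -> float:
    """Fraction of requests that did not end in a target 5XX response."""
    if sample.requests == 0:
        return 1.0  # Assumption: no traffic is treated as healthy.
    return 1.0 - sample.target_5xx / sample.requests


def impaired_zones(samples, threshold=0.99):
    """Return the zones whose availability is below the (assumed) threshold."""
    return [s.zone for s in samples if availability(s) < threshold]


# Illustrative numbers only.
samples = [
    ZoneSample("use1-az1", requests=1000, target_5xx=150),
    ZoneSample("use1-az2", requests=1000, target_5xx=2),
    ZoneSample("use1-az3", requests=1000, target_5xx=1),
]
print(impaired_zones(samples))
```

Evaluating each AZ separately like this is what lets a single-AZ problem stand out instead of being averaged away in a Regional metric.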

Using zonal shift to move traffic away from the AZ experiencing a service impairment

Traditional deployment strategy challenges

During an event, SRE, engineering, or operations teams can spend a lot of time trying to figure out if the source of impact is an infrastructure problem or related to a failed deployment. Then, based on the identified cause, they may take different mitigation actions. Thus, precious time is spent investigating the source of impact and deciding on the appropriate mitigation plan.

When the cause is due to a failed deployment, traditionally rollbacks are used to mitigate the problem. But rollbacks, even when automated, take time to complete. For example, let’s say your deployment batches take 5 minutes to complete, you deploy in 10% batches, and you’re halfway through a deployment to 100 instances when the rollback is initiated. This means it’s going to take at least 25 minutes to finish the rollback (5 batches, each taking 5 minutes to re-deploy to). And it’s entirely possible during that time that instances where the new software was deployed continue to pass health checks, but result in errors being returned to your customers. In the worst case, if all instances had been deployed to, this event could last for almost an hour with customers being impacted during the entire rollback process. In some cases, deployments can’t be rolled back and have to be rolled forward, meaning a new, updated version needs to be deployed to fix the previous deployment. Writing the code for the new deployment and testing it adds to the recovery time of your system and can be error prone.
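The rollback arithmetic above can be checked with a few lines of Python. This is only a back-of-the-envelope sketch: the function name is made up for illustration, and it assumes the rollback re-deploys one batch at a time at the same pace as the original deployment.

```python
import math


def rollback_minutes(total_instances, batch_percent, minutes_per_batch, deployed_fraction):
    """Estimate rollback time, assuming one batch is re-deployed at a time."""
    batch_size = max(1, math.ceil(total_instances * batch_percent / 100))
    deployed = math.ceil(total_instances * deployed_fraction)
    batches_to_roll_back = math.ceil(deployed / batch_size)
    return batches_to_roll_back * minutes_per_batch


# Halfway through a deployment to 100 instances in 10% batches of 5 minutes each.
print(rollback_minutes(100, 10, 5, 0.5))  # 25
# Worst case: every instance already has the new version.
print(rollback_minutes(100, 10, 5, 1.0))  # 50
```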

Additionally, if your unit of deployment includes multiple AZs, then your potential scope of impact from a failed deployment isn’t predictably bounded. For example, if your CodeDeploy deployment groups target Amazon Elastic Compute Cloud (Amazon EC2) instances based on tags or an Amazon EC2 Auto Scaling group that spans multiple AZs, then you could see impact across the whole Region, even if you’re using fractional deployments. There’s not a smaller fault container that helps consistently limit the scope of impact to a predictable size.

Let’s look at how we can overcome these two challenges by using zonal deployments with CodeDeploy.

Zonal deployments with AWS CodeDeploy

One of the best practices we follow at AWS, described in My CI/CD pipeline is my release captain, is performing fractional deployments aligned to intended fault isolation boundaries, like individual hosts, cells, AZs, and Regions. When we release a change, the deployment is separated into waves, which represent fault containers (like Regions) that are deployed to in parallel, and those are further separated into stages. Within a single Region, the deployment starts with a one-box environment, representing a single host, then moves on to fractional batches (like 10% at a time) inside a single AZ, waits for a period of bake time, moves on to the next AZ, and so on until we’ve completed rolling out the change.

Deployment stages aligned to intended fault isolation boundaries within a single deployment wave for one Region

By aligning each stage to an expected fault isolation boundary, we create well-defined fault containers that provide an understood and bounded scope of impact in the case that something goes wrong with a deployment. You can take advantage of this same deployment strategy in your own applications by using zonal deployments in CodeDeploy. To utilize this capability, you need to define a custom deployment configuration shown below.

Creating a zonal deployment configuration that deploys to 10% of the EC2 instances in each AZ at a time, one AZ at a time

This configuration defines a few important properties. First, it enables the zonal configuration, which ensures deployments will be phased one AZ at a time. In this case, updates will be deployed to batches of 10% of the instances in each AZ (see the minimum number of healthy instances per Availability Zone for more details on configuring this setting). Second, it defines a monitor duration, which is the bake time where the effects of the changes are observed before moving on to the next AZ. This ensures sufficient use of the new software to discover any potential bugs or problems before moving on. The value in this example is defined as 900 seconds, or 15 minutes. You should ensure this value is longer than the time it takes for your alarms to trigger. For example, if you are using an M of N alarm for availability and/or latency that uses 3 data points out of 5 with 1-minute intervals, you need to make sure your bake time is set to greater than 300 seconds (the 5-minute evaluation window); otherwise, you might move on to the next AZ before your alarm has a chance to mark the deployment as unsuccessful. Finally, I’ve also defined a first zone monitor duration. This overrides the “monitor duration” for the first AZ being deployed to. This is useful since the first AZ is acting as our canary or one-box environment and we may want to wait additional time to be really confident the deployment is successful before moving on to the second AZ.
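As a rough sketch, a configuration with these properties could be created with boto3 along the following lines. The configuration name, the 1800-second first zone monitor duration, and expressing "10% at a time" as a 90% minimum healthy fleet percentage are assumptions made for illustration, not values taken from this post. The helper that compares the bake time to the alarm window also assumes the alarm evaluation window (data points multiplied by period) is the relevant lower bound.

```python
def build_zonal_deployment_config(name, batch_percent=10,
                                  monitor_seconds=900,
                                  first_zone_monitor_seconds=1800):
    """Build keyword arguments for CodeDeploy's CreateDeploymentConfig call."""
    # Assumption: a 10% batch is expressed as keeping 90% of hosts healthy.
    min_healthy = {"type": "FLEET_PERCENT", "value": 100 - batch_percent}
    return {
        "deploymentConfigName": name,
        "computePlatform": "Server",
        "minimumHealthyHosts": min_healthy,
        "zonalConfig": {
            # Longer bake time for the first AZ (value is hypothetical).
            "firstZoneMonitorDurationInSeconds": first_zone_monitor_seconds,
            # Bake time before moving on to each subsequent AZ.
            "monitorDurationInSeconds": monitor_seconds,
            "minimumHealthyHostsPerZone": dict(min_healthy),
        },
    }


def alarm_window_seconds(datapoints_evaluated, period_seconds):
    """Length of an M of N alarm's evaluation window (N periods)."""
    return datapoints_evaluated * period_seconds


def create_config(params):
    """Send the request; needs boto3 and AWS credentials, so it is not run here."""
    import boto3  # Imported lazily so the helpers above work without it.
    return boto3.client("codedeploy").create_deployment_config(**params)


params = build_zonal_deployment_config("ZonalTenPercentExample")
# The bake time should exceed the alarm's evaluation window (3 of 5, 1-minute periods).
assert params["zonalConfig"]["monitorDurationInSeconds"] > alarm_window_seconds(5, 60)
print(params)
```

Keeping the request construction separate from the API call makes it easy to review or unit test the values before anything is created in an account.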

If your service is deployed behind a load balancer with cross-zone load balancing disabled (which is important to achieve AZI), carefully consider your batch size. The load balancer evenly splits traffic across AZs regardless of how many healthy hosts are in each AZ. Ensure your batch size is small enough that the temporary reduction in capacity during each batch doesn’t overwhelm the remaining instances in the same AZ. You can use the CodeDeploy minimum healthy hosts per AZ option to ensure there are enough healthy hosts in the AZ during a deployment batch or Elastic Load Balancing (ELB) target group minimum healthy target count with DNS failover to shift traffic away from the AZ if too few targets are present.
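To get a feel for the batch size trade-off, the following Python sketch estimates how much extra load each remaining instance in an AZ absorbs while a batch is out of service. It assumes the AZ keeps receiving the same share of traffic (cross-zone load balancing disabled) and that load spreads evenly across the instances still in service; the function name and numbers are illustrative.

```python
def load_multiplier_during_batch(instances_in_az, batch_size):
    """Per-instance load relative to normal while `batch_size` instances are out of service."""
    remaining = instances_in_az - batch_size
    if remaining <= 0:
        raise ValueError("batch would leave no in-service instances in the AZ")
    return instances_in_az / remaining


# 20 instances in the AZ, 2 being deployed to: each remaining instance takes about 11% more load.
print(round(load_multiplier_during_batch(20, 2), 3))
# 4 instances in the AZ, 1 being deployed to: each remaining instance takes about 33% more load.
print(round(load_multiplier_during_batch(4, 1), 3))
```

The smaller the fleet in each AZ, the larger the relative hit from even a single-instance batch, which is why the minimum healthy hosts per AZ setting matters.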

Recovering from a failed zonal deployment

When a failure occurs, the highest priority is mitigating the impact, not fixing the root cause. While an automated rollback can help achieve both for a failed deployment, using a zonal shift can improve your recovery time. Let’s take a simple dashboard like the following figure. The top graph shows your availability as perceived by customers through using the regional endpoint of your load balancer like https://load-balancer-name-and-id.elb.us-east-1.amazonaws.com. The graphs below it show the measured availability from Amazon CloudWatch Synthetics canaries that test the load balancer endpoints in each AZ using endpoints like https://us-east-1a.load-balancer-name-and-id.elb.us-east-1.amazonaws.com.

Dashboard showing impact in one AZ that affects the availability of the service

We can see that something starts impacting resources in AZ1 at 10:38, causing an availability drop. As we would expect, this impact is also seen by customers, shown in the top graph, but it’s unclear what the underlying cause of the availability drop is. Using the approach described in this post, it turns out that it doesn’t matter. Within a few minutes, at 10:41, the CloudWatch composite alarm monitoring the health of AZ1 transitions to the ALARM state and invokes a Lambda function that reads the alarm’s definition to get the AZ ID and ALB ARN involved, and initiates the zonal shift. It’s important that the alarm logic only reacts when a single AZ is impacted. If there was impact in more than one AZ, we would need to treat this as a Regional issue.
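The single-AZ decision and the zonal shift request can be sketched in Python as follows. This is not the Lambda function used for the dashboard above: the mapping of AZ IDs to alarm states, the one-hour expiry, the comment text, and the example ARN are assumptions, and the "exactly one AZ" check is done in code here only for illustration, whereas the post places that logic in the composite alarm.

```python
def zone_to_shift(zone_alarm_states):
    """Return the AZ ID to shift away from, or None unless exactly one AZ is in ALARM."""
    impaired = [zone for zone, state in zone_alarm_states.items() if state == "ALARM"]
    return impaired[0] if len(impaired) == 1 else None


def build_zonal_shift_request(resource_arn, zone_id, expires_in="1h"):
    """Build keyword arguments for the ARC zonal shift StartZonalShift call."""
    return {
        "resourceIdentifier": resource_arn,
        "awayFrom": zone_id,
        "expiresIn": expires_in,  # Assumed expiry; choose one that fits your runbook.
        "comment": "Automated shift: single-AZ availability alarm",
    }


def start_shift(request):
    """Send the request; needs boto3 and AWS credentials, so it is not run here."""
    import boto3  # Imported lazily so the helpers above work without it.
    return boto3.client("arc-zonal-shift").start_zonal_shift(**request)


# Hypothetical inputs for illustration.
states = {"use1-az1": "ALARM", "use1-az2": "OK", "use1-az3": "OK"}
alb_arn = "arn:aws:elasticloadbalancing:us-east-1:123456789012:loadbalancer/app/example/0123456789abcdef"

zone = zone_to_shift(states)
if zone is not None:
    print(build_zonal_shift_request(alb_arn, zone))
```

Returning `None` when zero or several AZs are impaired keeps the automation from shifting traffic during what may be a Regional event.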

After a failed deployment to AZ1, an automatically initiated zonal shift quickly mitigates the customer impact

Then, after a few more minutes, at 10:44, we can see availability from the customer perspective has gone back up to 100% by shifting traffic away from AZ1.

The impact of the failed deployment is mitigated by shifting traffic away from AZ1

It turns out the cause of impact in this case was a failed deployment, and we can see that our synthetic canaries still see the failure while the deployment is rolling back, but we’ve achieved our primary goal of quickly removing the impact to the customer experience. From the start of impact to mitigation, 6 minutes elapsed, which was significantly faster than waiting for the deployment to roll back completely. After the rollback is complete, at 10:58, 20 minutes after the start of the event, we can see the alarm transition back to the OK state and availability return to normal in AZ1, meaning we can end the zonal shift and return to normal operation.

After the deployment rollback is complete, the availability in AZ1 recovers and the zonal shift can be ended

Conclusion

Performing zonal deployments helps improve the effectiveness of AZI architectures. Aligning your deployments to your intended fault isolation boundaries, in this case AZs, creates a predictable scope of impact and helps prevent cascading failures. This in turn allows you to use a common set of observability and mitigation tools for both single-AZ infrastructure events and failed deployments, which can mitigate the impact faster than automated rollbacks. Additionally, removing the ambiguity operators face in selecting a recovery strategy further reduces recovery time and complexity. Learn more about zonal deployments in AWS CodeDeploy here.