Getting Started with Cilium Service Mesh on Amazon EKS

July 17, 2024

Cilium is an open source solution for providing, securing, and observing network connectivity between workloads, powered by the revolutionary kernel technology called extended Berkeley Packet Filter (eBPF). eBPF enables the dynamic insertion of security, visibility, and networking logic into the Linux kernel. Cilium provides high-performance networking, advanced load balancing, transparent encryption, and observability. Cilium was initially created by Isovalent and was donated to the Cloud Native Computing Foundation (CNCF) in 2021. Recently, in October 2023, Cilium became the first project to graduate in the cloud native networking category in CNCF. Graduated projects are considered stable and are used successfully in production environments.

Amazon Elastic Kubernetes Service (Amazon EKS) is a managed Kubernetes service that makes it easy for you to run and manage your Kubernetes clusters on AWS. It is certified Kubernetes-conformant by the CNCF. Organizations rely on Amazon EKS to handle the undifferentiated heavy lifting of deploying and operating Kubernetes at scale. Amazon EKS Anywhere, which is an open source deployment option of Amazon EKS on-premises, comes with Cilium as the default container networking solution.

As application architectures increasingly rely on dozens of microservices, more organizations are evaluating service mesh capabilities such as service-to-service monitoring, logging, and traffic control, some of which Cilium provides. Organizations can benefit from implementing Cilium Service Mesh on Amazon EKS by enhancing network security, improving observability, and streamlining service connectivity across Kubernetes clusters. This integration facilitates secure and efficient service-to-service communication, provides advanced traffic management capabilities, and enables comprehensive monitoring and troubleshooting of network issues. Use cases include multi-cluster networking, secure microservices communication, and network policy enforcement in complex, distributed applications. In this post, we describe the Cilium architecture and demonstrate some of its capabilities on Amazon EKS.

Cilium

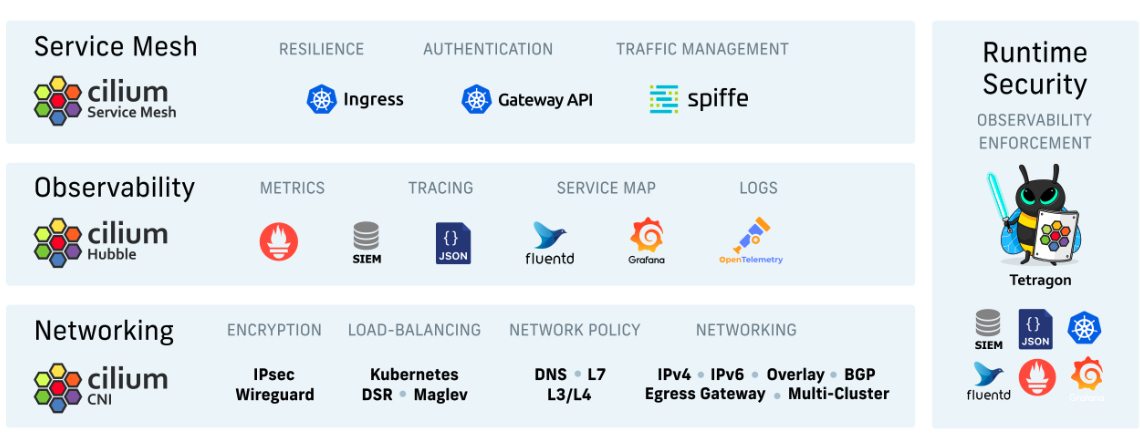

A visual representation of all the capabilities of Cilium is shown here.

Figure 1: Cilium overview (Source: https://github.com/cilium/cilium)

At its core, the Cilium architecture comprises the Cilium agent, the Cilium operator, the Cilium Container Network Interface (CNI) plugin, and the Cilium command line interface (CLI) client. The Cilium agent runs on every cluster node, where it configures networking, load balancing, and policies, and monitors the Kubernetes API. The Cilium operator manages tasks that are handled once for the whole cluster rather than once per node. The CNI plugin, invoked by Kubernetes when a Pod is scheduled or terminated, interacts with the node’s Cilium API to configure the data paths needed for networking, load balancing, and network policies. The Cilium CLI client is a command-line tool that is installed along with the Cilium agent. It interacts with the API of the Cilium agent running on the same node and lets you inspect the state and status of that local agent.

Figure 2: Cilium components

Cilium Service Mesh is a service mesh built natively into Cilium, on a sidecar-free data plane powered by Envoy. It offloads a large portion of service mesh functionality into the kernel and therefore requires no sidecar in the Pods, which reduces overhead and complexity.

Figure 3: Cilium Service Mesh implementation (Source: https://cilium.io/use-cases/service-mesh/)

As shown here, a Cilium agent is deployed per node. This agent programs Layer 4 (TCP, UDP, ICMP), Layer 3 (IP), and Layer 2 (Ethernet) functions, providing load balancing and visibility out of the box, all implemented with eBPF.

Cilium eliminates the sidecar, connecting the socket layer from the application directly through eBPF to the Linux kernel network interface. The Envoy proxy can be deployed as a component within the Cilium agent or as a separate Kubernetes daemonset. According to the tests performed by Isovalent, the eBPF native data-path with the Envoy proxy offers performance benefits.

Cilium Service Mesh separates the data path used for encryption and authentication from the data path used for Layer 7 processing. This separation matters for security because the HTTP processing logic is the most vulnerable part of a proxy. If the same proxy performs both Layer 7 processing and mTLS, an HTTP vulnerability can expose the SSL certificates it holds.

Wherever possible, Cilium Service Mesh uses the eBPF data plane. For use cases that Cilium’s sidecar-free model does not yet support, it uses Envoy as a per-node proxy. This figure outlines those use cases.

Figure 4: Cilium features that require a proxy (Source: https://www.cncf.io/projects/cilium)

Cilium Service Mesh provides visibility through Hubble, a fully distributed, open source networking and security observability platform. It is built on top of Cilium and eBPF to enable deep visibility and transparency into the communication and behavior of services and the networking infrastructure.

Figure 5: Hubble architecture (Source: https://cilium.io/use-cases/metrics-export/)

Deployment Architecture

To demonstrate some of the Cilium capabilities mentioned in the previous section, we used an Amazon EKS cluster that spans two Availability Zones (AZs) in an AWS Region, as shown here. We deployed Cilium in CNI chaining mode, running alongside the Amazon Virtual Private Cloud (Amazon VPC) CNI. In this mode, the Amazon VPC CNI owns Pod connectivity and IP address assignment, while Cilium owns network policy, load balancing, and encryption. We also used Cilium as the Kubernetes Ingress, which requires Cilium’s kube-proxy replacement feature to be enabled. This feature replaces kube-proxy, which is installed by default on EKS, with an eBPF-based implementation. Cilium uses the Envoy proxy on the nodes to fulfill Kubernetes Ingress. We also enabled Hubble to visualize the communication and dependencies between microservices within the EKS cluster.
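The deployment choices above map onto a small set of Helm chart values. The following is a minimal sketch rather than the exact installation used for this post: the key names are taken from the Cilium Helm chart documentation for recent releases and should be checked against the chart version you deploy, and the `helm_command` helper is hypothetical.

```python
# Illustrative values for running Cilium chained with the Amazon VPC CNI.
# Key names follow the Cilium Helm chart documentation; verify them against
# the chart version you install before using them.
CILIUM_VALUES = {
    "cni.chainingMode": "aws-cni",      # chain Cilium behind the Amazon VPC CNI
    "cni.exclusive": "false",           # leave the VPC CNI configuration in place
    "enableIPv4Masquerade": "false",    # Pod IPs are already routable in the VPC
    "routingMode": "native",
    "endpointRoutes.enabled": "true",
    "kubeProxyReplacement": "true",     # needed for Cilium Ingress
    "ingressController.enabled": "true",
    "hubble.relay.enabled": "true",
    "hubble.ui.enabled": "true",
}


def helm_command(values, release="cilium", chart="cilium/cilium",
                 namespace="kube-system"):
    """Render a `helm upgrade --install` argument list from a flat values dict."""
    args = ["helm", "upgrade", "--install", release, chart,
            "--namespace", namespace]
    for key, value in values.items():
        args += ["--set", f"{key}={value}"]
    return args


print(" ".join(helm_command(CILIUM_VALUES)))
```

The kube-proxy replacement also needs to know how to reach the Kubernetes API server (the chart exposes `k8sServiceHost` and `k8sServicePort` for this). Those values are cluster specific, so they are left out of the sketch.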

Figure 6: Deployment architecture

We used the Product Catalog Application as a real-world example. The application is composed of three microservices: Frontend, Product Catalog, and Catalog Detail. By default, each microservice is deployed as a single Pod.

Code sample

Please refer to the GitHub repository to test the solution architecture described in the next section.

Note: The code sample is for demonstration purposes only. It should not be used in production environments. Please refer to the Cilium documentation and the Amazon EKS Best Practices Guides, especially the Security Best Practices section, to learn how to run production workloads.

Ingress

Cilium does not implement Ingress by deploying dedicated Pods as Ingress gateways. Instead, it turns each node into an Ingress gateway by running an eBPF program on the node that accepts requests arriving on the NodePort and forwards them to the Envoy proxy on that node.

As shown in Figure 7, when a user accesses the application URL, the request first hits the AWS Network Load Balancer and then gets forwarded to one of the EKS worker nodes on the NodePort.

Figure 7: Application architecture and request flow

The request is first processed by the eBPF program running on the node and then by the Envoy proxy on the node. Envoy then forwards the request to one of the Pods that is part of the frontend service (developed in NodeJS). The frontend service calls the backend service productcatalog (developed in Python) to get the products, and productcatalog in turn calls the catalogdetail backend service (developed in NodeJS) to get vendor information. The manifest to configure Ingress can be found here.
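For orientation, an Ingress handled by Cilium is a standard Kubernetes Ingress resource that selects the `cilium` ingress class. The sketch below builds such a manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts. The resource name, the `frontend` service name, and port 9000 are placeholders chosen for illustration; the manifest in the repository is the authoritative version.

```python
import json


def cilium_ingress(name, service, port, path="/"):
    """Build a Kubernetes Ingress manifest that Cilium's ingress class handles."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name},
        "spec": {
            "ingressClassName": "cilium",
            "rules": [{
                "http": {
                    "paths": [{
                        "path": path,
                        "pathType": "Prefix",
                        "backend": {
                            "service": {"name": service,
                                        "port": {"number": port}},
                        },
                    }],
                },
            }],
        },
    }


# Placeholder names and port; replace with the values of your own service.
manifest = cilium_ingress("frontend-ingress", "frontend", 9000)
print(json.dumps(manifest, indent=2))
```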

When you access the application URL, Hubble provides the service dependency map almost immediately, as shown here.
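Conceptually, a service dependency map is an aggregation of observed flows into a directed graph. The sketch below illustrates that idea with hand-written (source, destination) pairs that mirror the request flow of the sample application. It is not Hubble's API or data model, and the `ingress` source label is a placeholder.

```python
from collections import defaultdict


def service_map(flows):
    """Collapse (source, destination) flow pairs into a dependency graph."""
    graph = defaultdict(set)
    for source, destination in flows:
        graph[source].add(destination)
    # Sort destinations so the result is stable and easy to compare.
    return {source: sorted(dests) for source, dests in graph.items()}


# Hypothetical flows mirroring the request path described in this post.
flows = [
    ("ingress", "frontend"),
    ("frontend", "productcatalog"),
    ("productcatalog", "catalogdetail"),
    ("frontend", "productcatalog"),  # repeated flows collapse into one edge
]

deps = service_map(flows)
for source, destinations in deps.items():
    print(source, "->", ", ".join(destinations))
```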

Figure 8: Hubble service map graph

Traffic Shifting

Based on Figures 7 and 8, you may have noticed that the catalogdetail service has two versions, v1 and v2. When the productcatalog service calls the catalogdetail service, Cilium load balances the requests randomly across both versions. We wanted to control that distribution by configuring a traffic shifting policy in Cilium. We configured a CiliumEnvoyConfig custom resource, which allows you to set the configuration of the Envoy proxy. To show this, we set up an Envoy listener that load balances 50% of the requests to v1 and 50% of the requests to v2 of the catalogdetail service.
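To build intuition for what a weighted split does, the following sketch simulates weight-based backend selection. It is a simplified model written for illustration, not Envoy's implementation, and the backend names and the `pick_backend` and `simulate` helpers are hypothetical.

```python
import random
from collections import Counter

# Hypothetical backend names; the weights mirror the 50/50 split in this post.
WEIGHTS = {"catalogdetail-v1": 50, "catalogdetail-v2": 50}


def pick_backend(weights, rng):
    """Pick one backend with probability proportional to its weight."""
    total = sum(weights.values())
    point = rng.randrange(total)  # uniform integer in [0, total)
    for name, weight in weights.items():
        if point < weight:
            return name
        point -= weight
    raise ValueError("weights must be positive integers")


def simulate(weights, requests, seed=0):
    """Count how many of `requests` simulated calls land on each backend."""
    rng = random.Random(seed)  # seeded so repeated runs give the same counts
    return Counter(pick_backend(weights, rng) for _ in range(requests))


counts = simulate(WEIGHTS, 10_000)
print(counts)
```

Changing the weights to, for example, 90 and 10 models a canary-style rollout in the same way.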

We verified this by using kubectl exec into the productcatalog Pod and generating requests for the catalogdetail service. As shown in this output, out of six requests in total, three of them were forwarded to v1 and the other three were forwarded to v2 of the service.
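A sample of six requests is small, so an even split is an indication rather than proof. Assuming each request is routed independently with probability 0.5 per version (a modeling assumption, not something the output confirms), the chance of seeing exactly three requests on each version can be computed from the binomial distribution:

```python
from math import comb


def exact_split_probability(requests, to_v1, weight_v1=0.5):
    """Binomial probability that exactly `to_v1` of `requests` reach v1."""
    return (comb(requests, to_v1)
            * weight_v1 ** to_v1
            * (1 - weight_v1) ** (requests - to_v1))


p = exact_split_probability(6, 3)
print(f"{p:.4f}")  # prints 0.3125
```

Under that assumption, an exact 3/3 outcome appears in roughly one out of three runs, so generating a larger number of requests gives a more reliable check of the configured weights.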

Considerations

- Cilium requires Linux kernel version 4.19.57 or later. For more details on Cilium requirements, please see the System Requirements section in the Cilium documentation. For this post we used Kubernetes v1.29 and the Amazon Linux 2 Amazon Machine Image (AMI) for the Amazon Elastic Compute Cloud (Amazon EC2) worker nodes, which is based on Linux kernel version 5.10.

- Amazon EKS now supports cluster creation without default networking add-ons. If you do not use this feature, you can still remove the kube-proxy add-on from your Amazon EKS cluster after installing Cilium with the kube-proxy replacement enabled, as described in the Cilium documentation.
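The kernel requirement in the first consideration can be checked with a short script. This is a minimal sketch that compares a kernel release string against the 4.19.57 minimum stated above. The example release string is hypothetical, and distribution-specific suffixes are ignored.

```python
import re

MINIMUM_KERNEL = (4, 19, 57)  # minimum kernel version stated in this post


def parse_kernel_release(release):
    """Extract (major, minor, patch) from a release string such as `uname -r` prints."""
    match = re.match(r"(\d+)\.(\d+)(?:\.(\d+))?", release)
    if match is None:
        raise ValueError(f"unrecognized kernel release: {release!r}")
    major, minor, patch = match.groups()
    return int(major), int(minor), int(patch or 0)


def meets_minimum(release, minimum=MINIMUM_KERNEL):
    """Return True if the release is at or above the minimum version."""
    return parse_kernel_release(release) >= minimum


# Hypothetical release string in the style of an Amazon Linux 2 kernel.
print(meets_minimum("5.10.205-195.807.amzn2.x86_64"))
```

On a worker node, the release string can be obtained with `uname -r` or, from Python, with `platform.release()`.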

Conclusion

Cilium provides diverse networking, security, and observability capabilities, all in the Linux kernel, by leveraging eBPF. Organizations that already use Cilium can expand on its capabilities and build a service mesh layer on their Amazon EKS clusters. In this blog post, we showed you how to deploy Cilium Service Mesh as Kubernetes Ingress and how to use it to shift traffic with Envoy-based, Layer 7-aware traffic policies.