How We Invented Our Own AI Software Engineer

April 10, 2025

Hi everyone!

We are super happy to announce a major update – a few days ago we finally introduced our new AI Engineer to Flatlogic Platform.

Long story short – now you can:

- Work in Two Environments:

Dev environment for development and instant feedback, and Stable environment for persistent changes.

- Version Control:

Track every change through our integrated Git-based versioning system, review full history, and roll back changes.

- Use AI Engineer for Application Development:

- Modify your data schema (entities, fields, relationships)

- Adjust source code directly (UI components, logic, styling)

- Update your application data (permissions, roles, content)

- Instant Reflection of Changes:

All updates made via AI are immediately reflected in a live preview – no need to wait for redeployment.

Now a longer version for those who love details!

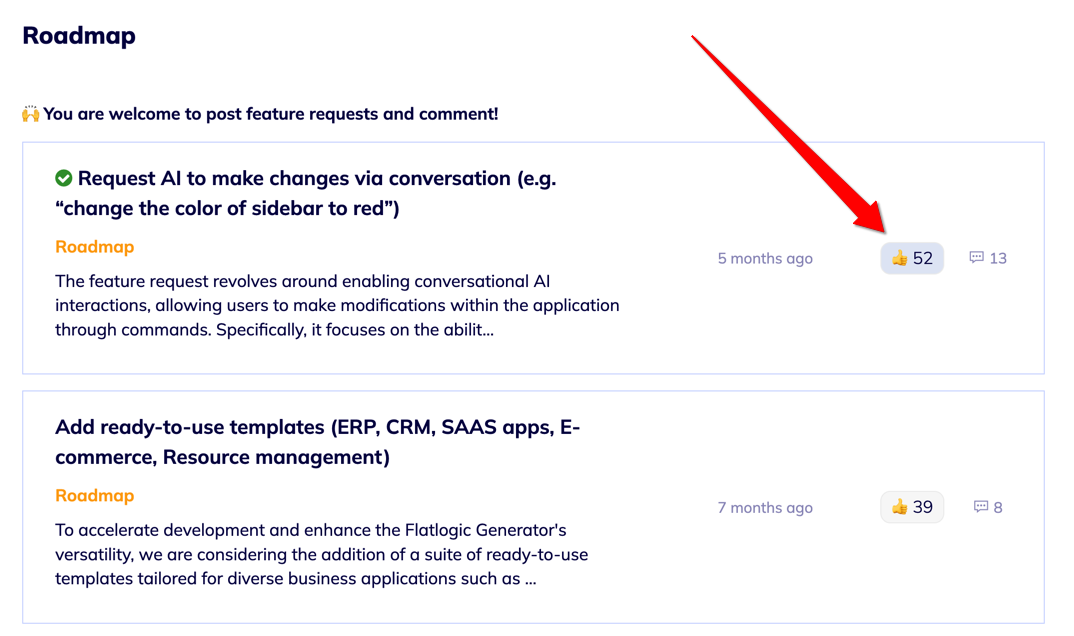

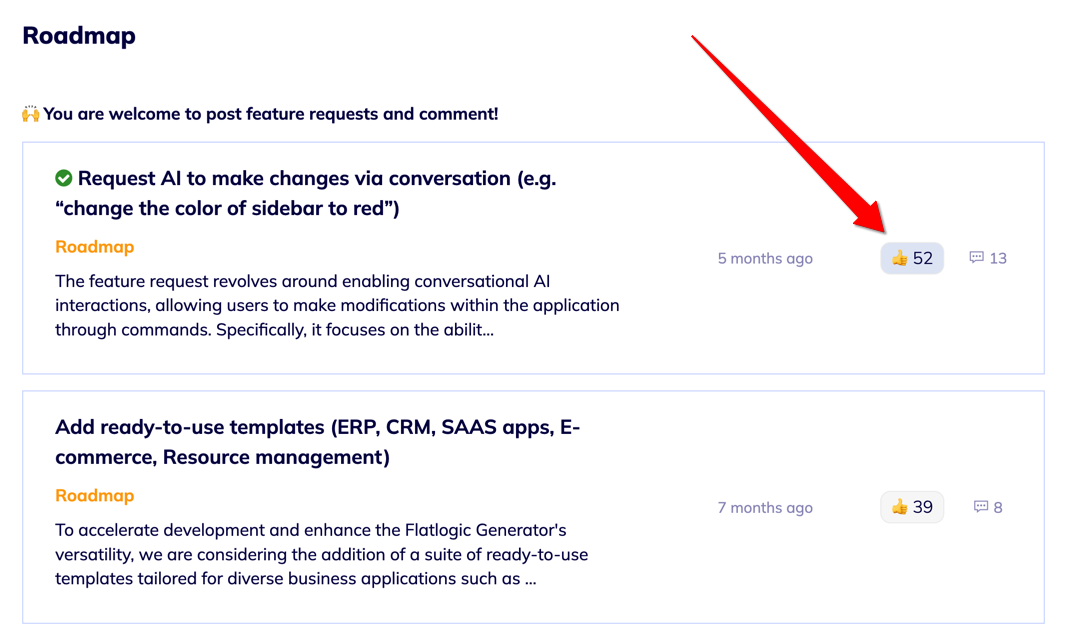

We had a super successful launch on AppSumo last year that proved to us Flatlogic Generator is INDEED needed!

However, it also showed that it was not complete – the most requested feature for Flatlogic Generator was the ability to make changes to the generated apps (obviously – how naive can one be to think one-attempt generation will be enough :).

OK, whatever our customers demand – we do! Accepted!

We naively thought, and promised, that we could deliver this feature in 6 months. How naive again!

The long story of inventing our own AI Engineer

OK, so I thought to myself – how do we approach “the ability to make changes” from the technical perspective at all? What’s the first step?

- It is localizing the problem, of course! Or, in other words, defining the system boundaries and degrees of freedom!

What did we have? We had a web app represented as a data model, and we could add entities and fields to the data model, then regenerate the app.

- That could be done via the schema editor only, but AI cannot use a UI – we need to extract commands such as “add entity”, “remove entity”, “add field”, etc., so they can be triggered programmatically.

- OK, so AI should be able to execute these “actions”/commands against the app – this became clear! But how do we do that? We have chat, but chat is chat – it is just text, not method calls. So we need to connect AI to our platform and allow it to execute commands via some interface.

- OK, but what about feedback? What if a user change request requires a few command calls?..

This one was tough…

…

- Then.

We need.

To build a feedback mechanism!

Then we need to feed back execution results to the LLM, for evaluation, and for coming up with the next step.

…

OMG! It felt like a solution!

- So we give the LLM a set of commands it is able to execute, feed back execution results, and ask it to perform the next action!

Cool!

…

- Wait, but what about code? The app represented as a data model is compiled into source code, the ultimate representation of the software, which should also be modifiable. This one is easy – we add a few more commands: “read_file”, “modify_file”, “replace_file_line”, etc.

- What if they want to modify roles or permissions? Or data in general? Easy!

More commands: “read_db_schema”, “execute_sql”!

- Ah, now I get it – we simply give AI all the abilities we have as human operators in our system: modify data model, modify source code, modify data. Nice! Finally something cool and working!

- A teammate sends me a guide by Anthropic, which has already become a classic – https://www.anthropic.com/engineering/building-effective-agents.

…

Mmm, so we also invented “the AI Agent”?

Nice! If respected guys think the same, then we are on the right track!

- OK, the core of the future system is ready! But when AI makes changes, the app has to be redeployed, which takes ~5 minutes.

OMG.

Completely unusable.

What do we do?

What do human engineers do?

- They launch in dev mode! With hot swap, or hot module replacement. Then this is what we do too! We need an environment where the app is launched in dev mode, so changes are seen immediately. Cool!

- But what about the state of the generated app? AI implements changes to the app, then what happens? Do we store them?

OMG.

…

I knew the answer, but I did not like it, because it meant we had to fundamentally adjust the system. And the answer, as you may have guessed, was:

…

GIT!

- So we set up our own Git-based version control system. We even set up our own Git server to host all user apps. So now you can simply click “Save” and under the hood a new commit will be created, so whatever you have is safe.

- Cool! Users can now create apps, modify them with AI, and store changes. Nice! But all of this is happening in dev mode! Not production-ready, again!

- So what do we do?

- Easy – we add another environment, which we called “Stable” (I hope we will be brave enough to add a Production environment too), where the app is launched in production mode. Now we're talking!

- Ah… Now dealing with environment syncing. Pushing from one. Resetting from another…

- A teammate asks:

– Hey, aren’t things getting too complex? Maybe we need to go back to selling templates?

– No! We already buried $$$k, we cannot stop here! This is not what real men do!

- Now! Everything should be OK, right?

No!

…

Our pricing model is outdated, never truly worked, and is not transparent. So what do we do?

- We add a credits-based pricing model: subscribe to get $X credits per month, spend credits on AI changes, hosting, and downloading derivative source code.

- Nice. Now it feels at least like a first MVP that we can publish – the foundation is here.

- Of course, there are more questions and things we plan to add very soon:

- A free limited version – yes!

- More improvements to AI Engineer (RAG, recipes, memory) – yes!

- Add more tailored business templates, so you are not starting from a basic app – yes!

- More UI diversity – yes!

- Out-of-the-box OpenAI & Stripe integrations – yes!

- And much more – https://flatlogic.com/forum/threads/category/roadmap

(Of course, if we survive, which you may help with by using and/or spreading the word about our product 🙂)

Today we are releasing everything up to item 18 – it took us just a year (haha) to get here!

The credits system, which is the final milestone before we can call the MVP complete, is coming soon (next week), so you still have a few more days of unlimited credits.
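For those who love details, here is a minimal Python sketch of the core loop from the story above: a set of commands the model may call, with each execution result fed back before it picks the next step. Everything in it is a hypothetical stand-in rather than the actual Flatlogic implementation: the commands work on in-memory dictionaries, `scripted_llm` replays a fixed plan instead of calling a real model, and the names `COMMANDS` and `run_agent` are made up for illustration.

```python
import json
from typing import Callable

# Hypothetical in-memory stand-ins for the app's data model and source files.
schema: dict[str, list[str]] = {}
files: dict[str, list[str]] = {"src/App.js": ["const title = 'Old';"]}


def add_entity(name: str) -> str:
    if name in schema:
        raise ValueError(f"entity {name!r} already exists")
    schema[name] = []
    return f"entity {name!r} added"


def add_field(entity: str, field: str) -> str:
    if entity not in schema:
        raise ValueError(f"unknown entity {entity!r}")
    schema[entity].append(field)
    return f"field {field!r} added to {entity!r}"


def replace_file_line(path: str, line_no: int, text: str) -> str:
    files[path][line_no - 1] = text  # line numbers are 1-based
    return f"{path}:{line_no} replaced"


# The "degrees of freedom": everything the model is allowed to do.
COMMANDS: dict[str, Callable[..., str]] = {
    "add_entity": add_entity,
    "add_field": add_field,
    "replace_file_line": replace_file_line,
}


def scripted_llm(history: list[dict]) -> dict:
    """Stand-in for a real model call: replays a fixed plan, one step per turn.

    The first step fails on purpose, so the error shows up in the history.
    A real model would read that history and decide the next step itself.
    """
    plan = [
        {"command": "add_field", "args": {"entity": "Invoice", "field": "total"}},
        {"command": "add_entity", "args": {"name": "Invoice"}},
        {"command": "add_field", "args": {"entity": "Invoice", "field": "total"}},
        {"command": "replace_file_line",
         "args": {"path": "src/App.js", "line_no": 1,
                  "text": "const title = 'Invoices';"}},
        {"command": "done", "args": {}},
    ]
    steps_taken = sum(1 for message in history if message["role"] == "tool")
    return plan[steps_taken]


def run_agent(request: str, llm=scripted_llm, max_steps: int = 10) -> list[dict]:
    """Ask for an action, execute it, feed the result back, repeat."""
    history = [{"role": "user", "content": request}]
    for _ in range(max_steps):
        action = llm(history)
        if action["command"] == "done":
            break
        try:
            result = COMMANDS[action["command"]](**action["args"])
        except Exception as exc:  # errors are feedback too
            result = f"error: {exc}"
        history.append({
            "role": "tool",
            "content": json.dumps({"action": action, "result": result}),
        })
    return history
```

Calling `run_agent("Add an Invoice entity with a total field and rename the page title")` leaves `schema` with an `Invoice` entity that has a `total` field, and the returned history contains the failed first attempt alongside the successful steps. The `max_steps` cap is a design choice for the sketch: without it, a model that never says "done" would loop forever.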

One last thing – The convergence

It is interesting that we effectively observed the “convergence” phenomenon, where different actors arrive at the same solution without exchanging information: we came to the “AI Agent” architecture just by trying to solve the “ability to make changes to the generated apps with AI” problem, same as many other actors.

The same applies to the Model Context Protocol, again by Anthropic. It will be super easy for us to support it, since we effectively already do so under the hood – we just need to expose the commands available to our AI Agent publicly, so they can be used by other AI agents.
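To give a rough idea of what “exposing commands” could look like, here is a small Python sketch that turns plain command functions into tool descriptors with the `name`, `description`, and `inputSchema` fields that MCP uses when listing tools. The two commands, the `describe_tool` helper, and the type mapping are assumptions made for illustration. A real MCP server would also need a transport and would normally be built on an MCP SDK, none of which is shown here.

```python
import inspect
import json


# Hypothetical commands, standing in for the ones an agent can already call.
def add_entity(name: str) -> str:
    """Add a new entity to the data model."""
    return f"entity {name!r} added"


def execute_sql(query: str, limit: int = 100) -> str:
    """Run a SQL statement against the app database."""
    return f"ran {query!r} (limit {limit})"


# Minimal mapping from Python annotations to JSON Schema type names.
JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def describe_tool(func) -> dict:
    """Build a tool descriptor from a function's signature and docstring."""
    properties, required = {}, []
    for name, param in inspect.signature(func).parameters.items():
        # Unannotated or unknown types fall back to "string" in this sketch.
        properties[name] = {"type": JSON_TYPES.get(param.annotation, "string")}
        if param.default is inspect.Parameter.empty:
            required.append(name)  # no default value means the caller must pass it
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "inputSchema": {
            "type": "object",
            "properties": properties,
            "required": required,
        },
    }


TOOLS = [describe_tool(f) for f in (add_entity, execute_sql)]
```

Printing `json.dumps(TOOLS, indent=2)` shows the descriptors another agent would see. Deriving them from the function signatures keeps the public tool list and the internal commands from drifting apart.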