Improving API performance at Sonar with Lambda SnapStart and Micronaut

January 27, 2025

SonarQube Cloud is a software as a service (SaaS) solution developed by Sonar that provides a comprehensive code analysis platform. It uses advanced static analysis techniques to automatically find and fix code quality issues, security vulnerabilities, and technical debt. It supports over 30 programming languages, frameworks, and infrastructure as code (IaC) platforms.

Sonar is committed to the open source community, providing solutions like SonarQube Server and SonarQube Cloud on AWS to help developers ensure code quality and security in their projects. SonarQube Cloud has been built as a managed service on AWS and offers a free plan to developers working on open source projects.

Overview

Sonar’s customers integrate SonarQube Cloud into their software development life cycle (SDLC) and upload their code for analysis. Therefore, it is essential for SonarQube Cloud to be reliable and secure. Sonar uses AWS Lambda for its strong security isolation boundary and capacity to correlate infrastructure consumption with tenant activity. This makes determining the cost impact of each tenant easy. AWS Lambda scales to zero when the system has no traffic for cost efficiency. The tradeoff is that SonarQube Cloud occasionally has cold starts, affecting a small percentage of customers’ user experience.

To improve this situation, Sonar has integrated Amazon API Gateway with AWS Lambda SnapStart. While modernizing SonarQube Cloud’s architecture on AWS, Sonar has implemented Domain-Driven Design principles by decomposing the application into bounded contexts, each owned by independent teams. They also built a unified API to expose these domains publicly and established an API strategy for their microservices.

Sonar uses the Micronaut application framework with Java for the implementation described in this blog. The Micronaut Framework is an open source, modern framework for building microservices and serverless applications. The framework has specific support for AWS Lambda within the Micronaut AWS library. The Micronaut framework was the preferred choice of Sonar engineers due to its strong integration with AWS Lambda and AWS Cloud Development Kit (AWS CDK), as well as its life cycle management features that support application snapshotting.

Before enabling SnapStart on their AWS Lambda function, Sonar engineers observed cold start durations between 7 and 8 seconds. Activating SnapStart reduced the cold start to 2.5 seconds, which was still unsatisfactory. This blog describes the journey Sonar then took to enhance developer experience: building on AWS Lambda SnapStart, they reduced cold start durations by up to 90%, reaching values as low as 250 ms.

Recap on how SnapStart works

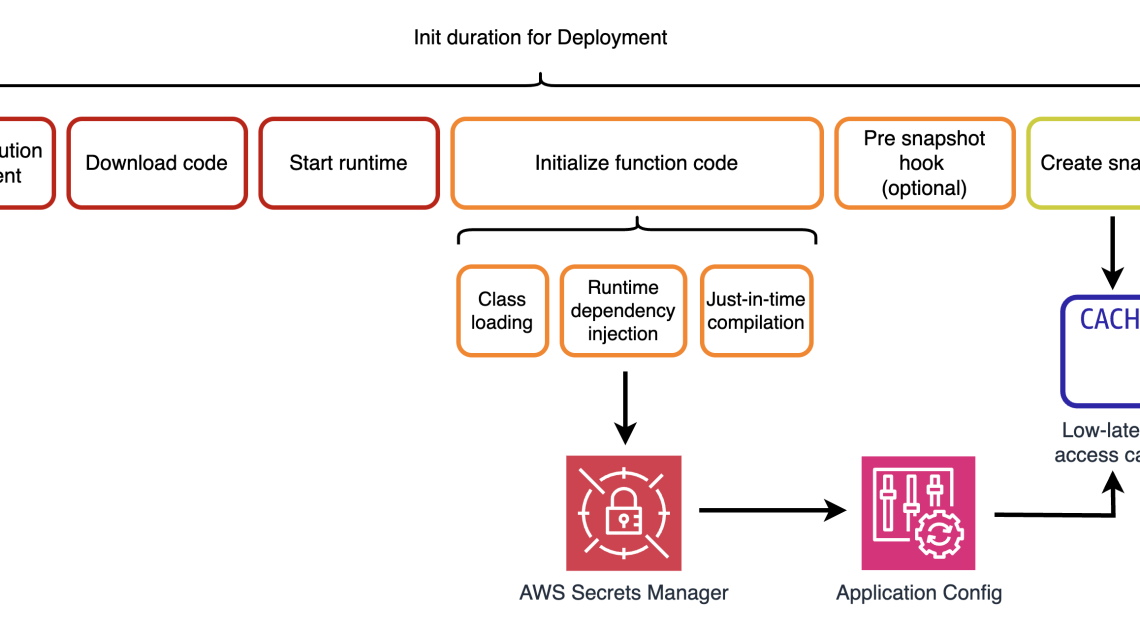

AWS builders and developers have been using AWS Lambda SnapStart for Java applications that require low-latency performance to achieve up to a 10-fold improvement in function startup times. It does not involve additional costs or code modifications. With on-demand functions, the primary cause of latency at startup is the time consumed in the initialization phase, while code is loaded and external connections are established. This latency is most noticeable during the function's first invocation.

With SnapStart, AWS Lambda initializes the function code in advance when you publish a function version, rather than waiting for the first invocation. By doing so, AWS Lambda captures an encrypted cached memory and disk state of the initialized execution environment.

With AWS Lambda SnapStart, function invocations resume from a tiered low-latency access cache established during the function version publication, eliminating the need to initialize from scratch.

The focus of this blog post is to explain the implementation work that Sonar engineers executed to reduce AWS Lambda cold starts. Use the following resources to read a detailed explanation:

- Reducing Java cold starts on AWS Lambda functions with SnapStart

- Starting up faster with AWS Lambda SnapStart

- Improving startup performance with Lambda SnapStart

Priming and runtime hooks

Priming addresses one of the primary contributors to initial invocation latency: Just-In-Time (JIT) compilation. By implementing priming strategies, developers can compile as much code as possible in advance, reducing the compilation overhead during the initial request.

SnapStart leverages the concept of priming, which involves preparing the Java application before the snapshot is taken, resulting in optimal performance after the restore operation. By utilizing the pre-snapshot hook, developers can proactively execute non-data-mutating code before the snapshot is taken.

By implementing priming in their AWS Lambda functions, Sonar developers ensure that class dependencies are resolved, allowing Just-In-Time (JIT) compilation to optimize performance.

How SnapStart integrates with Micronaut

The OpenJDK open source Coordinated Restore at Checkpoint (CRaC) project provides runtime hooks that enable you to execute custom code before and after a snapshot is created or restored. AWS Lambda SnapStart supports the CRaC API. The application can execute custom code at specific points during the snapshotting and restoration phases. It leverages the org.crac.Resource interface and its two methods: beforeCheckpoint and afterRestore. The methods allow you to run code immediately prior to taking a snapshot and immediately after restoring a snapshot.

The beforeCheckpoint hook is executed during snapshot at deployment time. An example action that you can perform here is an Amazon Aurora read-only operation or an Amazon DynamoDB getItem. Under the hood, it will establish connections to the data sources, lazy load resources, and perform the time-consuming Just-In-Time compilation on the code. That is why it is advantageous to perform it before taking a snapshot.

Conversely, the afterRestore hook provides an opportunity to restore or re-initialize any unique content after the snapshot has been restored, ensuring a consistent and correct application state upon invocation. The afterRestore method offers an entry point for code that runs each time a new execution environment is restored from the snapshot, whereas beforeCheckpoint runs when the snapshot of a function version is created.
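The two hooks can be sketched as follows. This is a minimal illustration under stated assumptions, not Sonar's code. To stay self-contained it declares a local `CracResource` interface as a simplified stand-in for `org.crac.Resource`, whose real methods also receive a `Context` argument and whose implementations are registered with `Core.getGlobalContext().register(...)`. The names `PrimedHandler`, `handle`, and `instanceId` are hypothetical.

```java
import java.util.UUID;

// Simplified stand-in for org.crac.Resource so the sketch compiles without the
// CRaC dependency. The real methods take a Context<? extends Resource> parameter.
interface CracResource {
    void beforeCheckpoint() throws Exception;
    void afterRestore() throws Exception;
}

// Hypothetical handler that primes itself before the snapshot is taken.
class PrimedHandler implements CracResource {
    private int primedCalls = 0;
    private String instanceId = UUID.randomUUID().toString();

    // Business logic; must be safe to call with a synthetic, read-only request.
    String handle(String path) {
        return "200 " + path.toLowerCase();
    }

    @Override
    public void beforeCheckpoint() {
        // Runs before the snapshot: exercise the hot path so classes are loaded
        // and the JIT has something to compile. The iteration count is arbitrary.
        for (int i = 0; i < 1_000; i++) {
            handle("/health");
            primedCalls++;
        }
    }

    @Override
    public void afterRestore() {
        // Runs after a restore: regenerate anything that must be unique per
        // execution environment instead of being copied from the snapshot.
        instanceId = UUID.randomUUID().toString();
    }

    int primedCalls() { return primedCalls; }
    String instanceId() { return instanceId; }
}

class PrimedHandlerDemo {
    public static void main(String[] args) {
        PrimedHandler handler = new PrimedHandler();
        handler.beforeCheckpoint();             // would be triggered when the snapshot is taken
        String idInSnapshot = handler.instanceId();
        handler.afterRestore();                 // would be triggered after a restore
        System.out.println(handler.primedCalls() + " priming calls, id changed: "
                + !idInSnapshot.equals(handler.instanceId()));
    }
}
```

The priming loop only calls code that does not mutate data, which matches the constraint described for the pre-snapshot hook.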

The Micronaut framework offers seamless integration with the CRaC project through the Micronaut CRaC module. By including this module as a dependency in their build, Sonar engineers have taken advantage of the CRaC functionality within their Micronaut-based application. Once the dependency is added, the SnapStart feature in AWS Lambda automatically invokes each registered CRaC resource at the appropriate points during the snapshot lifecycle. Priming will become important in the first step towards reducing cold starts.

Using Micronaut and its support for the CRaC API, Sonar codes any logic that needs to be performed before taking a snapshot.

Improvements in the Init phase

Sonar already reduced cold start times in the code initialization step by implementing AWS Lambda recommendations. By also fetching configurations from the secret management system during the deployment phase, when the snapshot is created, the cold start duration is significantly reduced. This proactive approach eliminates the need for an additional network call upon receiving a customer request.

Sonar builders store the application configuration in the snapshot. The secret is fetched and stored in the snapshot instead of during the initialization of a new AWS Lambda environment, improving cold start times roughly tenfold.

However, the configuration stored in AWS Secrets Manager can change after the snapshot is taken, and the function will not be aware of the updated value. For such scenarios, SnapStart provides the optional post-snapshot hook, which can re-fetch the value when the environment is restored.
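One way to picture this is a cache that keeps the value fetched at snapshot time and re-fetches it on restore. The sketch below is an illustration only: `SnapshotAwareSecret` is a hypothetical class, and its `Supplier` stands in for a call to AWS Secrets Manager. In a real function, `prime()` would be called from `beforeCheckpoint` and `refresh()` from `afterRestore`.

```java
import java.util.function.Supplier;

// Hypothetical cache for a secret that is loaded before the snapshot and
// refreshed after restore. The Supplier stands in for a Secrets Manager call.
class SnapshotAwareSecret {
    private final Supplier<String> loader;
    private volatile String value;

    SnapshotAwareSecret(Supplier<String> loader) {
        this.loader = loader;
    }

    // Intended for the pre-snapshot hook: the fetched value is captured in the snapshot.
    void prime() {
        value = loader.get();
    }

    // Intended for the post-snapshot hook: picks up changes made since the snapshot.
    void refresh() {
        value = loader.get();
    }

    // Request path: no network call when the value is already cached.
    String get() {
        if (value == null) {
            value = loader.get();
        }
        return value;
    }
}

class SnapshotAwareSecretDemo {
    public static void main(String[] args) {
        String[] stored = {"v1"};                       // simulated secret store
        SnapshotAwareSecret secret = new SnapshotAwareSecret(() -> stored[0]);
        secret.prime();                                 // at snapshot time
        stored[0] = "v2";                               // secret rotated later
        System.out.println(secret.get());               // still the snapshot value
        secret.refresh();                               // after restore
        System.out.println(secret.get());               // rotated value
    }
}
```

The refresh adds a network call to the restore path, so it trades some restore latency for freshness of the configuration.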

The next finding in Sonar’s analysis was a warning from the Hikari data source regarding a stale connection. This library handles data sources and connection pools. These require consideration since SnapStart will store the state of the database connection in the snapshot. Restoring the snapshot can render these connections outdated or invalid.

To address this, the Micronaut framework provides a strategy called “pool suspension”: the allow-pool-suspension flag instructs the framework to suspend the connection pool at the moment the SnapStart snapshot is taken. This reduces cold-start time, as the application can suspend connections instead of having to recover from a broken one. By doing this, Sonar ensures that the snapshot doesn’t capture any connections or resources that may become stale or invalid later on. In this scenario, establishing, pausing, and resuming the connection is quicker than employing a post-snapshot hook.
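The idea behind pool suspension can be modeled with a toy pool. This is a conceptual sketch only: `ToyPool` is hypothetical and is not the Hikari or Micronaut API, and it assumes that suspension means refusing new requests and dropping idle connections before the snapshot, then reopening connections on demand after restore.

```java
import java.util.ArrayDeque;
import java.util.Deque;

// Toy connection pool; NOT HikariCP. It only models the suspend/resume idea.
class ToyPool {
    private final Deque<String> idle = new ArrayDeque<>();
    private boolean suspended = false;
    private int opened = 0;

    // Hands out an idle connection, or opens a new one if none is available.
    // A real pool may block callers while suspended; throwing keeps the sketch simple.
    String acquire() {
        if (suspended) {
            throw new IllegalStateException("pool is suspended");
        }
        String conn = idle.poll();
        if (conn == null) {
            opened++;
            conn = "conn-" + opened;
        }
        return conn;
    }

    void release(String conn) {
        idle.push(conn);
    }

    // Before the snapshot: stop handing out connections and drop idle ones,
    // so no live connection state ends up in the snapshot.
    void suspend() {
        suspended = true;
        idle.clear();
    }

    // After restore: accept requests again; connections are reopened on demand.
    void resume() {
        suspended = false;
    }

    int idleCount() { return idle.size(); }
}

class ToyPoolDemo {
    public static void main(String[] args) {
        ToyPool pool = new ToyPool();
        pool.release(pool.acquire());   // warm the pool with one connection
        pool.suspend();                 // pre-snapshot
        pool.resume();                  // post-restore
        System.out.println(pool.acquire());
    }
}
```

Because the snapshot holds an empty, suspended pool, the restored environment never tries to reuse a connection that the database has already closed.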

Even more priming

Publishing custom metrics is widely used to measure business and technical performance. Amazon CloudWatch Embedded Metric Format (EMF) enables you to ingest high-cardinality application data (such as requestId) into Amazon CloudWatch as logs and generate actionable metrics. It does this without sacrificing data granularity or richness. It simplifies observability by inserting JSON metrics into structured log events.

For workloads with short-lived resources like AWS Lambda functions, this feature is valuable as it allows you to include custom metrics with detailed log event data in an asynchronous manner. Pushing metrics synchronously with the AWS SDK would instead block the function while it waits for a response.

Sonar engineers have integrated EMF in their AWS Lambda code using the open source client libraries available for Java. After further analysis of the execution duration, they identified that priming the embedded metrics code path reduces the execution duration.
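To make the format concrete, the sketch below builds a single EMF log line by hand. It is a hand-rolled illustration rather than the client library Sonar uses. The namespace `ExampleApp`, the `Service` dimension, and the `Latency` metric are hypothetical, and the values are assumed not to need JSON escaping. Calling a method like this once from the pre-snapshot hook is one way such a code path could be primed.

```java
import java.util.Locale;

// Builds one CloudWatch Embedded Metric Format (EMF) log line by hand.
// Namespace, dimension, and metric names are hypothetical.
class EmfLine {
    static String build(long timestampMillis, String service, String requestId, double latencyMs) {
        return String.format(Locale.ROOT,
            "{\"_aws\":{\"Timestamp\":%d,\"CloudWatchMetrics\":[{"
            + "\"Namespace\":\"ExampleApp\","
            + "\"Dimensions\":[[\"Service\"]],"
            + "\"Metrics\":[{\"Name\":\"Latency\",\"Unit\":\"Milliseconds\"}]}]},"
            + "\"Service\":\"%s\",\"requestId\":\"%s\",\"Latency\":%.1f}",
            timestampMillis, service, requestId, latencyMs);
    }

    public static void main(String[] args) {
        // In Lambda, a line written to stdout lands in CloudWatch Logs, where
        // EMF-formatted entries are turned into metrics asynchronously.
        System.out.println(build(System.currentTimeMillis(), "api", "req-123", 12.5));
    }
}
```

The `requestId` field is not listed under `Metrics` or `Dimensions`, so it stays in the log event as searchable high-cardinality context without becoming a metric dimension.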

More is less

After the improvements listed and implemented above, Sonar now has an AWS Lambda function with a first-time execution of approximately 500 ms, down from an initial 2.5 seconds. Sonar developers were still looking for further improvements. AWS Lambda scales automatically and you only pay for what you use. However, choosing the right memory size settings for an AWS Lambda function is still an important task.

Sonar engineers analyzed memory tuning as it also determines the amount of virtual CPUs available for their functions. Adding more memory proportionally increases the amount of CPU. Since CPU-bound AWS Lambda functions see the most benefit when memory increases, the results observed by Sonar in their case were promising from a cost perspective as well:

By increasing the allocated memory from 512 MB to 1769 MB, the function now has the equivalent of one vCPU of computing power. This reduces the first-time execution from around 500 ms to 250 ms. Moreover, the cold start occurrence constitutes only 0.05% of all calls running during a whole month.

When the memory is increased to 1769 MB, the cost per 1 million invocations grows from $4.24 to $5.88. For a 38% increase in cost, the duration is reduced by 60%.
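The reason more memory can cost only a little more is that Lambda compute charges scale with memory multiplied by duration, so a shorter duration offsets part of the larger allocation. The sketch below shows that relationship. `LambdaCost` and its methods are hypothetical helpers, the price per GB-second is left as a parameter because it depends on Region and architecture, and request charges are ignored.

```java
// Rough Lambda compute-cost model: cost scales with memory x duration.
class LambdaCost {
    // GB-seconds consumed by a number of invocations.
    static double gbSeconds(int memoryMb, double avgDurationMs, long invocations) {
        return (memoryMb / 1024.0) * (avgDurationMs / 1000.0) * invocations;
    }

    // Compute charge for the given GB-seconds at an assumed unit price.
    static double computeCost(double gbSeconds, double pricePerGbSecond) {
        return gbSeconds * pricePerGbSecond;
    }

    // Relative change in percent, for comparing two configurations.
    static double percentChange(double before, double after) {
        return (after - before) / before * 100.0;
    }

    public static void main(String[] args) {
        // Cost figures quoted in the text, per 1 million invocations.
        System.out.printf("cost change: %.1f%%%n", percentChange(4.24, 5.88));
    }
}
```

Running the same comparison for several memory sizes, with measured durations for each, is a simple way to find the point where extra memory stops paying for itself.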

Measuring SnapStart improvements

CloudWatch Logs Insights enables developers to create ad hoc queries to understand Lambda function behavior using stored logs. AWS Lambda provides insights with its automatic logging feature, generating a REPORT log entry for every function invocation. These logs offer a detailed breakdown of the function's performance, including actual and billed duration, as well as memory utilization. AWS Lambda also captures and logs the Restore Duration for snapshot restores. A Logs Insights query on the function's CloudWatch logs can generate an overview of restore statistics.

This query was used to report on the progress of reducing latency throughout this blog.
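For readers who want to see how such statistics are derived, the following sketch computes an average and a percentile from exported REPORT lines. It is an offline illustration and not the query Sonar ran. `RestoreStats` is a hypothetical helper, the log lines are assumed to contain a `Restore Duration: <n> ms` field, and the percentile uses the nearest-rank method on a non-empty list.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper that summarizes restore durations from REPORT log lines.
class RestoreStats {
    // The lookbehind skips the separate "Billed Restore Duration" field.
    private static final Pattern RESTORE =
        Pattern.compile("(?<!Billed )Restore Duration: ([0-9.]+) ms");

    // Extracts restore durations (ms) from REPORT lines; other lines are ignored.
    static List<Double> restoreDurations(List<String> logLines) {
        List<Double> out = new ArrayList<>();
        for (String line : logLines) {
            if (!line.startsWith("REPORT")) {
                continue;
            }
            Matcher m = RESTORE.matcher(line);
            if (m.find()) {
                out.add(Double.parseDouble(m.group(1)));
            }
        }
        return out;
    }

    // Arithmetic mean; returns 0 for an empty list.
    static double average(List<Double> values) {
        double sum = 0;
        for (double v : values) {
            sum += v;
        }
        return values.isEmpty() ? 0 : sum / values.size();
    }

    // Nearest-rank percentile; the list must not be empty.
    static double percentile(List<Double> values, double p) {
        List<Double> sorted = new ArrayList<>(values);
        Collections.sort(sorted);
        int rank = (int) Math.ceil(p / 100.0 * sorted.size());
        return sorted.get(Math.max(rank, 1) - 1);
    }
}
```

Invocations without a restore produce REPORT lines with no restore field, so they are skipped and only cold starts contribute to the statistics.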

Summary of SnapStart improvements

After enabling SnapStart, the function’s cold start duration decreased from 7-8 seconds to 2.5 seconds. From that point on, the cold start improvements achieved by Sonar, after implementing all the actions mentioned above, are:

| Stage | Average Execution Duration | P99 |
| --- | --- | --- |
| Before priming | 2110 ms | 2343 ms |
| After priming and CRaC | 1218 ms | 1354 ms |
| After Connection Pool Suspension | 712 ms | 848 ms |
| After CloudWatch Metrics Priming | 401 ms | 541 ms |
| After Memory Tuning | 221 ms | 276 ms |

Conclusion

This blog post provides actionable guidance on reducing cold start durations using AWS Lambda SnapStart. We have explained how Sonar builders reduced their AWS Lambda function cold start duration from up to 2500 ms to around 250 ms.

By enabling SnapStart on their AWS Lambda functions, Sonar builders improved the cold start times by 3.5 times. To provide even lower latencies to their customers, Sonar implemented advanced optimizations, starting with priming and continued with performance benchmarking at different memory allocations.

In Sonar’s journey towards building a robust, efficient and scalable microservices architecture, Micronaut has played an important role. Micronaut achieves this through its dedicated CRaC module, which offers developers a straightforward way to incorporate state capture at a specific point in time. This advantage aligns well with the principles of open source development by promoting efficiency.

From a business perspective, Sonar achieved a secure and cost-efficient implementation of their latency-sensitive microservices. It aligns with the serverless SaaS strategy to offer a service experience for their customers that focuses on zero downtime and regular development. They have designed an architecture that can continually evolve and rapidly respond to market demands.

The learnings summarized in this blog will be used by Sonar engineers as a blueprint for all their AWS Lambda implementations.

The performance improvements presented in this blog are specific to and reported by the customer and the particular use case analyzed. Your performance results may vary.