Why Bad Bugs in DNS (And Other Open Source Code) Just Won’t Go Away

December 20, 2022

Earlier this year, security researchers at Nozomi Networks discovered a DNS vulnerability in two C standard libraries used widely in embedded systems. The bug leaves the libraries vulnerable to cache poisoning – a DNS flaw Dan Kaminsky discovered in 2008.

Paul Vixie, a contributor to the Domain Name System (DNS) and distinguished engineer and VP at AWS Security, underscored this DNS issue during his keynote address at Open Source Summit Europe in Dublin.

Vixie stated that the real fix is Domain Name System Security Extensions (DNSSEC), released in 2010; however, DNSSEC was still not deployed widely enough to solve this problem when it turned up again in embedded systems some 13 years later.

These two libraries are open source software — anyone can inspect them, Vixie concluded. “So, this should be embarrassing that in 2022 there’s still widely used open source software that has this vulnerability in it.”

As an original author and eventual patcher of some of these DNS bugs, Vixie took us on a journey through the early days of the internet to examine how we got to where we are today. He then provided best practices that consumers and producers can follow now to help reduce vulnerabilities and mitigate security risks in the future.

How it all started: Vixie takes us back to 1986

Did you know that nearly all devices that use DNS are running a fork of a fork of a fork of 4.3 BSD code from 1986?

It all started when the publishers of the 4.3 Berkeley Software Distribution (BSD) of UNIX added support for the (then) new DNS protocol in a novel way. Getting a new release out on magnetic tapes and shipping it out in containers was a lot of work. So, they published it as a patch by posting it to Usenet (newsgroups), on an FTP server, and via a mailing list. If users were interested, they could download the patch. And at that time, in 1986, the internet was still small. “This was pre-commercialization, pre-privatization; the whole world was not using the internet,” Vixie said.

When Vixie began working on DNS shortly after the patch was issued, it was considered abandonware – no one was maintaining it. The people at Berkeley who had been working on it had all graduated. However, anyone who had a network device needed DNS. They needed to do DNS lookups, but the names of the APIs that Berkeley published were not standardized. Different embedded systems vendors had their own domain naming conventions, and they copied the 4.3 BSD code and changed it to suit their local engineering considerations.

“Then Linux came along… and right after that, we commercialized the internet,” said Vixie. In other words, things got big! “All of our friends and relations started to get email addresses, and it was wonderful and creepy all at the same time.”

Where things get a little complicated

Once the internet “got big,” every distro had built its own C library and copied some version of the old Berkeley code. “So, they might know that it came from Berkeley and get the latest one, or just copied what some other distro used and made a local version of it that was divorced from the upstream,” said Vixie. “Then we got embedded systems, and now IoT is everywhere, and all the DNS code in all of the billions of devices are running some fork of a fork of a fork of code that Berkeley published in 1986, so DNS is almost never independently reimplemented.”

It’s truly amazing that we are literally standing on the shoulders of giants. And this is just one example.

Vixie takes responsibility

Vixie surprised me and garnered my respect even more by taking responsibility for his past actions. He showed that he was accountable and transparent by stating, “All of those bugs and vulnerabilities that I showed you earlier…all of the bugs that are mentioned in that RFC are bugs that I wrote or at least bugs that I shipped when I shouldn’t have. And there are bugs that I fixed. I fixed those bugs in the 1990s. And so, for an embedded system today to still have that problem or any of those problems means that whatever I did to fix it, wasn’t enough. I didn’t have a way of telling people.”

Ok, what can we do now?

Once Vixie took the audience through the pages of internet history, he asked, “So, what can we learn?” I giggled a bit when he said, “Well, it sure would have been nice to already have an internet when we were building one, because then there would have been something like GitHub instead of an FTP server and a mailing list and a Usenet newsgroup. But in any era, you use what you have and try to anticipate what you are going to have.”

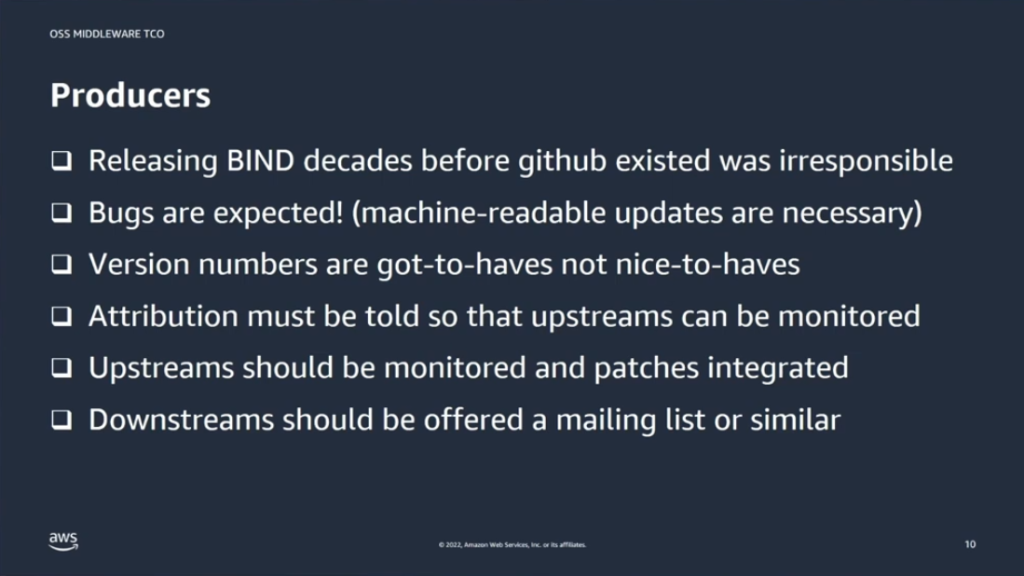

Best Practices for Producers

Vixie’s advice for what we could do now started with recommendations for steps that producers could take to mitigate and reduce code vulnerabilities.

Producers should take the following proactive approach.

Slide from Vixie’s Keynote at OSS EU: Findings and Recommendations for Producers

- Presume that all software has bugs. “It is the safe position to take,” stated Vixie.

- Have a way of shipping changes that are machine-readable. “You can’t depend on a human to monitor a mailing list. It has to be some automation to get the scale necessary to operate.”

- Include version numbers. Version numbers are must-haves, not nice-to-haves. “People who are depending on you need to know something more than what you thought worked on Tuesday,” Vixie said. “They need an indicator, and that indicator often takes the form of the date – in a year, month, day format. And it doesn’t matter what it is; it just has to uniquely identify the bug (and feature) level of any given piece of software. So, we have put these version numbers in, even if they serve no purpose for us as developers locally.”

- Say where you got code. “It should be in your README files. It should be in your source code comments because you want it to be that if somebody is chasing a bug and they reach that bit of local source code, they’ll understand, ah… this is a local fork, there is an upstream, let’s see if they have fixed this,” Vixie said.

- Automate your own monitoring of these upstream projects. “If there’s a change, then you need to look at it, decide what it means to you. Is it a bug that you also have, or is that part of the code base that you didn’t import? Is that a part that you have completely rewritten? Do you have the same bug but in a different function name or some other local variation of it? This is not optional,” Vixie added.

- Give your downstreams some way to know when you have made a change.
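To make a few of these producer recommendations concrete, here is a minimal Python sketch. It assumes date-style version strings in a `YYYY.MM.DD` form, and every name in it (`Provenance`, `needs_review`, `release_notice`, the example URL) is hypothetical and chosen for illustration only; it is not something Vixie presented. It records where a vendored copy of code came from, checks whether upstream has shipped something newer, and builds a machine-readable notice that downstreams could poll.

```python
from dataclasses import dataclass
from datetime import date
from typing import Dict, List


@dataclass(frozen=True)
class Provenance:
    """Where a local copy of code came from (hypothetical record format)."""
    name: str
    upstream_url: str
    imported_version: str  # date-style version, assumed to be "YYYY.MM.DD"


def parse_date_version(version: str) -> date:
    """Turn a "YYYY.MM.DD" version string into a date so versions can be compared."""
    year, month, day = (int(part) for part in version.split("."))
    return date(year, month, day)


def needs_review(record: Provenance, latest_upstream: str) -> bool:
    """Return True when upstream has a release newer than the one we imported."""
    imported = parse_date_version(record.imported_version)
    return parse_date_version(latest_upstream) > imported


def release_notice(name: str, version: str, fixes: List[str]) -> Dict[str, object]:
    """Build a machine-readable change notice for downstream automation."""
    return {"name": name, "version": version, "fixes": sorted(fixes)}
```

In practice, something like `needs_review` would run on a schedule against whatever release feed the upstream project actually publishes, and a `True` result would open a ticket for an engineer rather than trigger an automatic import.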

Best Practices for Consumers

Next, Vixie continued by identifying best practices that consumers can follow to help mitigate or reduce vulnerabilities.

Consumers can take the following proactive approach:

Slide from Vixie’s Keynote at OSS EU: Findings and Recommendations for Consumers

- Understand the risks of your external dependencies. To dig a little deeper into the pockets of the problem, Vixie stated, “As a consumer, when you import something, remember that you are also importing everything it depends on. So, when you check your dependencies, you have to do it recursively. You have to go all the way up. Uncontracted dependencies are a dangerous thing. If you are taking free software from somebody and hoping that team doesn’t disband, doesn’t go on vacation, doesn’t maybe have a big blow-up and make a fork and there are two forks, but the one you are using is dead. We have no other choice, we need the software that everybody else is writing, but we have to recognize that such dependencies are an operating risk” and not merely an unconnected benefit.

Side Note: How free software gets expensive

Vixie said, “Orphaned dependencies become things you have to maintain locally, and that is a much higher cost than monitoring the developments that are coming out of other teams. But it is a cost that you will have as these dependencies eventually become outdated. Somebody moves from version 2 to version 3, and you really liked version 2, but it’s dead code. Well, you have to maintain version 2 yourself. That’s expensive. It’s either expensive because you hire enough people and build enough automation, or it’s expensive because you don’t. That’s the choice.

“So mostly, we should automatically import the next version of whatever, but it can’t be fully automated; sometimes the license will change from one you could live with to one you can’t. You may have an uncontracted dependency with somebody at some point that decides that they want to get paid. So that is another risk.”

- Depend on known version numbers or version number ranges; say what version number you need. “So, as you become aware that only the version from this one or higher has the fix that you now know that you’ve got to have, you can make sure that you don’t accidentally get an older one. Or it might be a specific version. Only that version is suitable for you, in which case someday that TAR file is going to disappear. And you’re going to worry about whether you have a local copy and what you’re going to do,” Vixie said.

- Avoid creating local forks. “It’s usually better not to have a local fork of something, so you don’t have to maintain it yourself.”

- Monitor and review releases and decide the level of urgency to dedicate to updates. “Every time somebody releases something, open a ticket and make it some engineer’s job to go look at it, see if it’s safe, see if it’s necessary, see if it’s absolutely vital, set-my-hair-on-fire, work over the weekend, or we’ll just get to it when we get to it,” Vixie said.
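Two of the consumer recommendations above, checking dependencies recursively and depending on a known minimum version, can be sketched in a few lines of Python. The dependency graph and package names below are invented for illustration, and the version check assumes plain dotted numeric versions such as `1.9.3`; real package managers use richer version schemes, so treat this as a sketch of the idea rather than a drop-in tool.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical dependency graph: package name -> its direct dependencies.
DEPENDS_ON: Dict[str, List[str]] = {
    "my-firmware": ["http-client", "libc-fork"],
    "http-client": ["tls-lib", "libc-fork"],
    "tls-lib": [],
    "libc-fork": ["dns-resolver"],
    "dns-resolver": [],
}


def transitive_dependencies(root: str, graph: Dict[str, List[str]]) -> Set[str]:
    """Collect everything `root` pulls in, directly or indirectly."""
    seen: Set[str] = set()
    stack = list(graph.get(root, []))
    while stack:
        package = stack.pop()
        if package in seen:
            continue  # already visited; also guards against cycles
        seen.add(package)
        stack.extend(graph.get(package, []))
    return seen


def parse_version(version: str) -> Tuple[int, ...]:
    """Split a dotted numeric version into integers so 1.10.0 sorts after 1.9.3."""
    return tuple(int(part) for part in version.split("."))


def satisfies_minimum(installed: str, minimum: str) -> bool:
    """Return True when the installed version is at or above the required floor."""
    return parse_version(installed) >= parse_version(minimum)
```

Here `transitive_dependencies("my-firmware", DEPENDS_ON)` surfaces `dns-resolver` even though the firmware never imports it directly, which is the kind of inherited code Vixie warns about. Comparing versions as integer tuples rather than strings avoids treating `1.10.0` as older than `1.9.3`.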

In conclusion, Vixie stated, “If you can’t afford to do these things, then free software is too expensive for you.” And with that, Vixie dropped the mic and walked off the stage. Ok, that didn’t happen, but that would have been the perfect ending to such an impactful statement.

As an “OS Newbie,” a badge ribbon I wore proudly at Open Source Summit EU, I had my first opportunity to get immersed in open source. Attending this conference in my first few months as an Amazonian helped me better understand our industry’s shared successes and challenges. I gained a lot of perspective from several resources and sessions; however, this important lesson taught by Paul Vixie really stood out for me: free software comes at a cost. How much does it cost? The answer depends on the proactive or reactive choices you make as a consumer or producer of open source code.

Open source software is critical to our future. As we continue to use it to innovate, we must remember to follow the best practices Vixie outlined. And taking advice from a living legend is worth the expense.

Helpful Security Resources: https://openssf.org/resources/guides/